Image Credits: Bryce Durbin / TechCrunch

ChatGPT-generated rhyming summary of one of the winners of the 2022 Nobel Prize in Physics. Image Credits: Text: OpenAI/ChatGPT

Image Credits: cundra / Getty Images

Piranesi-style sketch generated by DALL-E, with corner clipped to indicate AI origin. Image Credits: OpenAI/Devin Coldewey

Why AI must not counterfeit humanity

“Shall I say thou art a man, that hast all the symptoms of a beast? How shall I know thee to be a man? By thy shape? That affrights me more, when I see a beast in likeness of a man.” (Robert Burton, The Anatomy of Melancholy)

I propose that computer software be prohibited from engaging in pseudanthropy, the impersonation of humans. We must take steps to keep the computer systems commonly called artificial intelligence from behaving as if they are living, thinking peers to human beings; instead, they must use positive, unmistakable signals to identify themselves as the sophisticated statistical models they are.

This is because if we don’t, these systems will systematically deceive billions in the service of the hidden and mercenary interests of the people or organizations that operate them; and, aesthetically speaking, because it is unbecoming of intelligent life to suffer imitation by machines.

As numerous scholars have observed even before the documentation of the “Eliza effect” in the ’60s, humanity is dangerously overeager to recognize itself in replica: A veneer of natural language is all it takes to convince most people that they are talking with another person.

But what began as an intriguing novelty, a sort of psycholinguistic pareidolia, has escalated to purposeful deception. The advent of large language models has produced engines that can generate plausible and grammatical answers to any question. Obviously these can be put to good use, but mechanically reproduced natural language that is superficially indistinguishable from human discourse also presents serious risks. (Likewise generative media and algorithmic decision-making.)

These systems are already being presented as or mistaken for humans, if not yet at great scale, but that danger continually grows nearer and clearer. The organizations that have the resources to create these models are not just incidentally but purposefully designing them to imitate human interactions, with the intention of deploying them widely upon tasks currently performed by humans. Simply put, the intent is for AI systems to be convincing enough that people assume they are human and will not be told otherwise.

Just as few people bother to discover the truthfulness of an outdated article or deliberately crafted disinformation, few will inquire as to the humanity of their interlocutor in any commonplace exchange. These companies are counting on it and intend to abuse the practice. Widespread misconception of these AI systems being like real people with thoughts, feelings and a general stake in existence (important things, none of which they possess) is inevitable if we do not take action to prevent it.

This is not about a fear of artificial general intelligence, or lost jobs, or any other immediate concern, though it is in a sense existential. To paraphrase Thoreau, it is about preventing ourselves from becoming the tools of our tools.

I contend that it is an abuse and dilution of anthropic qualities, and a harmful imposture upon humanity at large, for software to fraudulently present itself as a person by superficial mimicry of uniquely human attributes. Therefore, I propose that we outlaw all such pseudanthropic behaviors and require clear signals that a given agent, interaction, decision, or piece of media is the product of a computer system.

Some possible such signals are discussed below. They may come across as fanciful, even absurd, but let us admit: We live in absurd, fanciful times. This year’s serious conundrums are last year’s science fiction, and sometimes not even as far back as that.

Of course, I’m under no illusions that anyone will adhere to these voluntarily, and even if they were by some miracle required to, that would not stop malicious actors from ignoring those requirements. But that is the nature of all rules: They are not laws of physics, impossible to contravene, but a means to guide and identify the well-meaning in an ordered society, and provide a structure for censuring violators.

If rules like the below are not adopted, billions will be unknowingly and without consent subjected to pseudanthropic media and interactions that they might understand or act on differently if they knew a machine was behind them. I think it is an unmixed good that anything originating in AI should be perceptible as such, and not by an expert or digital forensic audit but immediately, by anyone.

At the very least, consider it a thought experiment. It should be a part of the conversation around regulation and ethics in AI that these systems could and ought to both declare themselves clearly and forbear from deception, and that we would probably all be better off if they did. Here are a few ideas on how this might be accomplished.

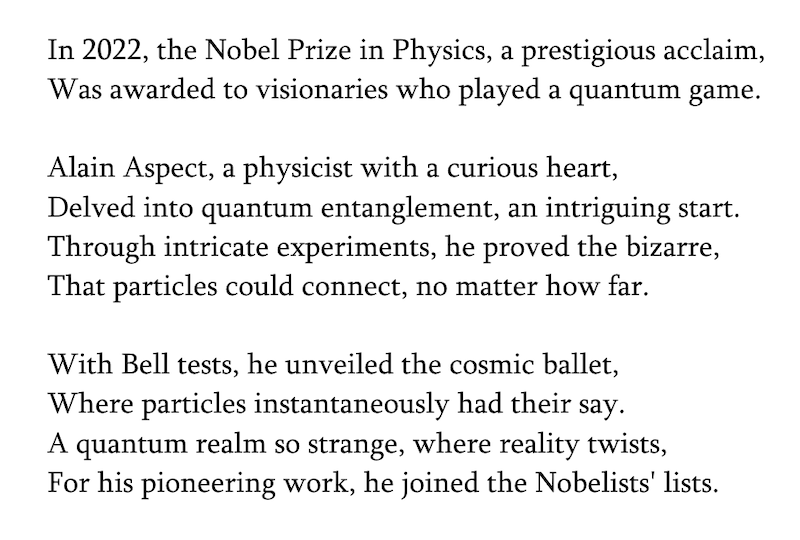

1. AI must rhyme

This sounds outlandish and facetious, and certainly it’s the least likely rule of all to be adopted. But little else would as neatly solve as many problems emerging from generated language.

One of the most common venues for AI impersonation today is in text-based interactions and media. But the problem is not actually that AI can produce human-like text; rather, it is that humans try to pass off that text as being their own, or having issued from a human in some way or another, be it spam, legal opinions, social studies essays, or anything else.

There’s a lot of research being performed on how to identify AI-generated text in the wild, but so far it has met with little success and the promise of an endless arms race. There is a simple solution to this: All text generated by a language model should have a distinctive characteristic that anyone can recognize yet leaves meaning intact.

For example, all text produced by an AI could rhyme.

Rhyming is possible in most languages, equally obvious in text and speech, and is accessible across all levels of ability, learning and literacy. It is also fairly hard for humans to imitate, while being more or less trivial for machines. Few would bother to publish a paper or submit their homework in an ABABCC dactylic hexameter. But a language model will do so happily and instantly if asked or required to.

We need not be picky about the meter, and of course some of these rhymes will necessarily be slant, contrived or clumsy. But as long as it comes in rhyming form, I think it will suffice. The goal is not to beautify, but to make it clear to anyone who sees or hears a given piece of text that it has come straight from an AI.

Today’s systems seem to have a literary bent, as demonstrated by ChatGPT:

An improved rhyming corpus would improve clarity and tone things down a bit. But it gets the gist across and if it cited its sources, those could be consulted by the user.

This doesn’t eliminate hallucinations, but it does alert anyone reading that they should be on watch for them. Of course it could be rewritten, but that is no trivial task either. And there is little risk of humans imitating AI with their own doggerel (though it may prompt some to improve their craft).

Again, there is no need to universally and perfectly change all generated text, only to create a reliable, unmistakable signal that the text you are reading or hearing is generated. There will always be unrestricted models, just as there will always be counterfeits and black markets. You can never be completely sure that a piece of text is not generated, just as you cannot prove a negative. Bad actors will always find a way around the rules. But that does not remove the benefit of having a universal and affirmative signal that some text is generated.

If your travel recommendations come in iambics, you can be pretty sure that no human bothered to try to fool you by composing those lines. If your customer service agent caps your return confirmation with a satisfying alexandrine, you know it is not a person helping you. If your therapist talks you through a crisis in couplets, it doesn’t have a mind or emotions with which to sympathize or advise. Same for a blog post from the CEO, a complaint to the school board, or a hotline for eating disorders.

In any of these cases, might you act differently if you knew you were speaking to a computer rather than a person? Perhaps, perhaps not. The customer service or travel plans might be just as good as a human’s, and faster to boot. A non-human “therapist” could be a desirable service. Many interactions with AI are harmless, useful, even preferable to an equivalent one with a person. But people should know to begin with, and be reminded frequently, especially in circumstances of a more personal or important nature, that the “person” talking to them is not a person at all. The choice of how to interpret these interactions is up to the user, but it must be a choice.

If there is a solution as practical but less whimsical than rhyme, I welcome it.

2. AI may not present a face or identity

There’s no reason for an AI model to have a human face, or indeed any aspect of human individuality, except as an attempt to extract unearned sympathy or trust. AI systems are software, not organisms, and should present and be perceived as such. Where they must interact with the real world, there are other ways to express attention and intention than pseudanthropic face simulation. I leave the invention of these to the fecund imaginations of UX designers.

AI also has no national origin, personality, agency or identity, but its diction emulates that of humans who do. So, while it is perfectly reasonable for a model to say that it has been trained on Spanish sources, or is fluent in Spanish, it cannot claim to be Spanish. Likewise, even if all its training data was attributed to female humans, that does not impart femininity upon it any more than a gallery of works by female painters is itself female.

Consequently, as AI systems have no gender and belong to no culture, they should not be referred to by human pronouns like he or she, but rather as objects or systems: like any app or piece of software, “it” and “they” will suffice.

(It may even be worth extending this rule to when such a system, being in fact without a self, inevitably uses the first person. We may wish to have these systems use the third person instead, such as “ChatGPT” rather than “I” or “me.” But admittedly this may be more trouble than it is worth. Some of these issues are discussed in a fascinating paper published recently in Nature.)

An AI ought not claim to be a fictitious person, such as a name invented for the purposes of authorship of an article or book. Names such as these serve solely to identify the human behind something and as such using them is pseudanthropic and deceptive. If an AI model generated a significant proportion of the content, the model should be credited. As for the names of the models themselves (an inescapable necessity; many machines have names after all), a convention might be useful, such as single names beginning and ending with the same letter or phoneme: Amira, Othello, and the like.

This also applies to instances of specific impersonation, like the already common practice of training a system to replicate the vocal and verbal patterns and knowledge of an actual, living person. David Attenborough, the renowned naturalist and narrator, has been a particular target of this as one of the world’s most recognizable voices. However entertaining the result, it has the effect of counterfeiting and devaluing his imprimatur, and the reputation he has carefully cultivated and defined over a lifetime.

Navigating consent and ethics here is very difficult and must evolve alongside the technology and culture. But I suspect that even the most permissive and optimistic today will find cause for worry over the next few years as not just world-famous personalities but politicians, colleagues and loved ones are re-created against their will and for malicious purposes.

3. AI cannot “feel” or “think”

Using the language of emotion or self-awareness despite possessing neither makes no sense. Software can’t be sorry, or afraid, or worried, or happy. Those words are only used because that is what the statistical model predicts a human would say, and their usage does not reflect any kind of internal state or drive. These false and misleading expressions have no value or even meaning, but serve, like a face, only to lure a human interlocutor into believing that the interface represents, or is, a person.

As such, AI systems may not claim to “feel,” or express affection, sympathy, or frustration toward the user or any subject. The system feels nothing and has only chosen a plausible series of words based on similar sequences in its training data. But despite the ubiquity of rote dyads like “I love you / I love you too” in literature, naive users will take an identical exchange with a language model at face value rather than as the foregone outcome of an autocomplete engine.

Nor is the language of thought, consciousness, and analysis appropriate for a machine learning model. Humans use phrases like “I think” to express dynamic internal processes unique to sentient beings (though whether humans are the only ones is another matter).

Language models and AI in general are deterministic by nature: complex calculators that produce one output for each input. This mechanistic behavior can be avoided, and the illusion of thought reinforced, by salting prompts with random numbers or otherwise including some output-variety function, but this must not be mistaken for cogitation of any real kind. They no more “think” a response is correct than a calculator “thinks” 8 x 8 is 64. The language model’s math is more complicated; that is all.
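The point about determinism can be illustrated with a toy sketch in Python. Everything in it is hypothetical: the probability table, the function names and the use of a seed as the "salt" are assumptions made for illustration, not a description of how any real language model is built. It shows only that a fixed input gives a fixed output, and that variety appears when a random number is mixed in.

```python
import random

# Hypothetical toy "model": fixed probabilities for the next word.
NEXT_WORD_PROBS = {"yes": 0.5, "no": 0.3, "maybe": 0.2}


def greedy_next_word(probs):
    """Always return the most probable word: one output per input."""
    return max(probs, key=probs.get)


def salted_next_word(probs, salt):
    """Return a word drawn by probability, with the draw fixed by `salt`.

    The same salt always yields the same word; different salts may not.
    """
    rng = random.Random(salt)  # the "salt" is just a seed for the generator
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]
```

Calling `salted_next_word` twice with the same salt returns the same word both times. The apparent spontaneity comes entirely from the salt, not from anything resembling deliberation.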

As such, the systems must not mimic the language of internal deliberation, or that of forming and having an opinion. In the latter case, language models simply reflect a statistical representation of opinions present in their training data, which is a matter of recall, not position. (If matters of ethics or the like are programmed into a model by its creators, it can and should of course say so.)

NB: Obviously the above two prohibitions directly undermine the popular use case of language models trained and prompted to emulate certain categories of person, from fictitious characters to therapists to caring partners. That phenomenon wants years of study, but it may be well to say here that the loneliness and isolation experienced by so many these days deserves a better solution than a stochastic parrot puppeteered by surveillance capitalism. The need for connection is real and valid, but AI is a void that cannot fill it.

4. AI-derived figures, decisions and answers must be marked⸫

AI models are increasingly used as intermediate functions in software, inter-service workflows, even inside other AI models. This is useful, and a panoply of subject- and task-specific agents will likely be the go-to solution for a lot of powerful applications in the medium term. But it also multiplies the depth of inexplicability already present whenever a model produces an answer, a number, or binary decision.

It is likely that, in the near term, the models we use will only grow more complex and less transparent, while results relying on them appear more commonly in contexts where previously a person’s estimate or a spreadsheet’s calculation would have been.

It may well be that the AI-derived figure is more reliable, or inclusive of a variety of data points that improve outcomes. Whether and how to employ these models and data is a matter for experts in their fields. What matters is clearly signaling that an algorithm or model was employed for whatever purpose.

If a person applies for a loan and the loan officer makes a yes or no decision themselves, but the amount they are willing to loan and the terms of that loan are influenced by an AI model, that must be indicated visually in any context those numbers or conditions are present. I suggest appending an existing and easily recognizable symbol that is not widely used otherwise, such as a signe-de-renvoi (⸫) which historically indicated removed (or dubious) matter.

This symbol should be linked to documentation for the models or methods used, or at the very least naming them so they can be looked up by the user. The idea is not to provide a comprehensive technical breakdown, which most people wouldn’t be able to understand, but to express what specific non-human, decision-making systems were employed, and upon what matters. It’s little more than an extension of the widely used citation or footnote system, but AI-derived figures or claims should have a dedicated mark rather than a generic one.
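As a rough sketch of how such marking might look in software, the Python below appends the mark to any figure a model influenced and produces a footnote naming the model and what it was used for. The `Figure` type, its field names and the model name "ExampleRiskModel v1" are all invented for illustration; no existing system or standard is implied.

```python
from dataclasses import dataclass
from typing import Optional

AI_MARK = "⸫"  # the signe-de-renvoi suggested in the text


@dataclass(frozen=True)
class Figure:
    """A displayed value, optionally derived with the help of a model."""
    label: str
    value: str
    model_name: Optional[str] = None  # None means no model was involved
    used_for: str = "unspecified"     # what the model decided or influenced


def render(figure: Figure) -> str:
    """Show the figure, appending the mark only if a model was involved."""
    text = f"{figure.label}: {figure.value}"
    if figure.model_name is not None:
        text += AI_MARK
    return text


def footnote(figure: Figure) -> Optional[str]:
    """Name the model and its role, like a citation; None if human-made."""
    if figure.model_name is None:
        return None
    return f"{AI_MARK} Derived with {figure.model_name} (used for: {figure.used_for})"


# Hypothetical loan example: the officer decides, a model shapes the amount.
decision = Figure("Decision", "approved")
amount = Figure("Loan amount", "$12,000",
                model_name="ExampleRiskModel v1",
                used_for="loan amount and terms")
```

Here `render(decision)` carries no mark because a person made that call, while `render(amount)` ends in ⸫ and `footnote(amount)` tells the reader which system to look up.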

There is research being done in making statements made by language models reducible to a series of assertions that can be individually checked. Unfortunately, it has the side effect of multiplying the computational cost of the model. Explainable AI is a very active research area, and so this guidance is as likely as the rest to evolve.

5. AI must not make life or death decisions

Only a human is capable of weighing the considerations of a decision that may cost another human their life. After defining a category of decisions that qualify as “life or death” (or some other term connoting the correct gravity), AI must be precluded from making those decisions, or attempting to influence them beyond providing information and quantitative analysis (marked, of course, per above).

Of course it may still provide information, even crucial information, to the people who do actually make such decisions. For instance, an AI model may help a radiologist find the correct outline of a tumor, and it can provide statistical likelihoods of different treatments being effective. But the decision on how or whether to treat the patient is left to the humans concerned (as is the attendant liability).

Incidentally, this also prohibits lethal machine warfare such as bomb drones or autonomous gun turrets. They may track, identify, categorize, etc., but a human finger must always pull the trigger.

If presented with an apparently unavoidable life or death decision, the AI system must stop or safely disable itself instead. This corollary is necessary in the case of autonomous vehicles.

The best way to short-circuit the insoluble “trolley problem” of deciding whether to kill (say) a kid or a grandma when the brakes go out, is for the AI agent to destroy itself instead as safely as possible at whatever cost to itself or indeed its occupants (perhaps the only allowable exception to the life or death rule).

It’s not that hard: there are a million ways for a car to hit a lamppost, or a freeway divider, or a tree. The point is to obviate the morality of the question and turn it into a simple matter of always having a realistic self-destruction plan ready. If a computer system acting as an agent in the physical world isn’t prepared to destroy itself or at the very least take itself out of the equation safely, the car (or drone, or robot) should not operate at all.

Similarly, any AI model that positively determines that its current line of operation could lead to serious harm or loss of life must halt, explain why it has halted, and await human intervention. No doubt this will produce a fractal frontier of edge cases, but better that than leaving it to the self-interested ethics boards of a hundred private companies.

6. AI imagery must have a corner clipped

As with text, image generation models produce content that is superficially indistinguishable from human output.

This will only become more troublesome, as the quality of the imagery improves and access broadens. Therefore it should be required that all AI-generated imagery have a distinctive and easily identified quality. I suggest clipping a corner off, as you see above.

This doesn’t solve every problem, as of course the image could simply be cropped to exclude it. But again, malicious actors will always be able to circumvent these measures. We should first focus on ensuring that non-malicious generated imagery like stock images and illustrations can be identified by anyone in any context.

Metadata gets stripped; watermarks are lost to artifacting; file formats change. A simple but prominent and durable visual feature is the best option right now. Something unmistakable yet otherwise uncommon, like a corner clipped off at 45 degrees, one-fourth of the way up or down one side. This is visible and clear whether the image is also tagged “generated” in context, saved as a PNG or JPG, or any other transient quality. It can’t be easily blurred out like many watermarks, but would have to have the content regenerated.
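The geometry of such a clip is simple enough to sketch in a few lines of Python. This is one possible reading of the suggestion, not a specification: it assumes the top-right corner, a cut whose legs are each one-fourth of the image height (which gives the 45-degree angle), and an image held as a plain list of pixel rows. The function names are made up for this example.

```python
def is_clipped(x, y, width, height):
    """True if pixel (x, y) falls inside the clipped top-right corner.

    The cut is a right triangle with two equal legs of height // 4 pixels,
    so its diagonal edge runs at 45 degrees. y = 0 is the top row.
    """
    leg = height // 4
    distance_from_right = width - 1 - x
    return distance_from_right + y < leg


def clip_corner(pixels, fill=None):
    """Return a copy of `pixels` (a list of rows) with the corner replaced by `fill`."""
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    return [
        [fill if is_clipped(x, y, width, height) else pixels[y][x]
         for x in range(width)]
        for y in range(height)
    ]
```

Because the mark removes part of the picture rather than overlaying it, the missing corner survives format changes and re-saving in a way that metadata does not, though cropping still defeats it, as noted above.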

There is still a role for metadata and things like digital chain of custody, perhaps even steganography, but a clearly visible signal is helpful not just to forensic image analysts but to ordinary users looking at the picture.

Of course this exposes people to a new risk: that of trusting that only images with clipped corners are generated. The problem we are already facing is that all images are suspect, and we must rely entirely on subtler visual clues; there is no simple, positive signal that an image is generated. Clipping is just such a signal and will help in defining the increasingly commonplace practice.

Appendix

Won’t people just circumvent rules like these with non-limited models?

Yes, and I pirate TV shows sometimes. I jaywalk sometimes. But generally, I adhere to the rules and laws we have established as a society. If someone wants to use a non-rhyming language model in the privacy of their own home for reasons of their own, no one can or should stop them. But if they want to make something widely available, their practice now takes place in a collective context with rules put in place for everyone’s safety and comfort. Pseudanthropic content transitions from personal to societal matters, and from personal to societal rules. Different countries may have different AI rules, as well, just as they have different rules on patents, taxes and marriage.

Why the neologism? Can’t we just say “anthropomorphize”?

Pseudanthropy is to counterfeit humanity; anthropomorphosis is to transform into humanity. The latter is something humans do, a projection of one’s own humanity onto something that lacks it. We anthropomorphize everything from toys to pets to cars to tools, but the difference is none of those things purposefully emulate anthropic qualities in order to cultivate the impression that they are human. The habit of anthropomorphizing is an accessory to pseudanthropy, but they are not the same thing.

And why propose it in this rather overblown, self-serious way?

Well, that’s just how I write!

How could rules like these be enforced ?

Ideally, a federal AI commission should be founded to create the rules, with input from stakeholders like academics, civil rights advocates, and industry groups. My broad gestures of suggestions here are not actionable or enforceable, but a rigorous set of definitions, capabilities, restrictions and disclosures would provide the kind of guarantee we expect from things like food labels, drug claims, privacy policies, etc.

If people can’t tell the difference, does it really matter?

Yes, or at least I think so. To me it is clear that superficial mimicry of human attributes is dangerous and must be limited. Others may feel differently, but I strongly suspect that over the next few years it will become much clearer that there is real harm being done by AI models pretending to be people. It is literally dehumanizing.

What if these models really are sentient ?

I take it as axiomatic that they aren’t. This sort of question may eventually achieve plausibility, but right now the idea that these models are self-aware is totally unsupported.

If you force AIs to declare themselves, won’t that make it harder to detect them when they don’t?

There is a danger that by making AI-generated content more obvious, we will not develop our ability to tell it apart naturally. But again, the next few years will likely push the technology forward to the point where even experts can’t tell the difference in most contexts. It is not fair to expect average people to perform this already difficult process. Ultimately it will become a crucial cultural and media literacy skill to recognize generated content, but it will have to be developed in the context of those tools, as we can’t do it beforehand. Until and unless we train ourselves as a culture to differentiate the original from the generated, it will do a lot of good to use signals like these.

Won’t rules like this impede innovation and progress?

Nothing about these rules limits what these models can do, only how they do it publicly. A prohibition on making mortal decisions doesn’t mean a model can’t save lives, only that we should be choosing as a society not to trust them implicitly to do so independent of human input. Same for the language: these do not stop a model from finding or providing any information, or performing any helpful function, only from doing so in the guise of a human.

You know this isn’t going to work, right?

But it was worth a shot.