Image Credits: AWS

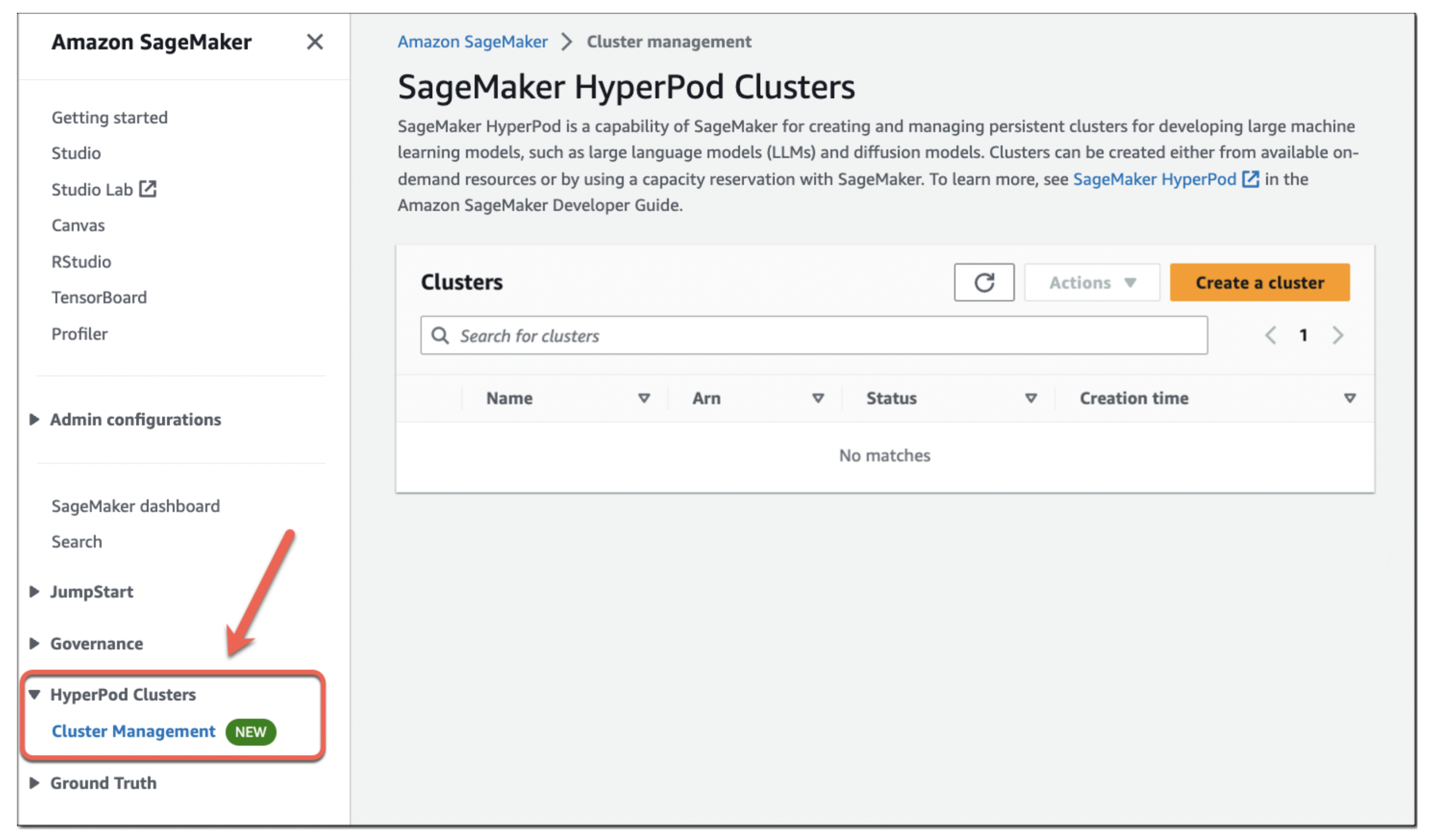

At its re:Invent conference today, Amazon’s AWS cloud arm announced the launch of SageMaker HyperPod, a new purpose-built service for training and fine-tuning large language models (LLMs). SageMaker HyperPod is now generally available.

Amazon has long bet on SageMaker, its service for building, training and deploying machine learning models, as the linchpin of its machine learning strategy. Now, with the advent of generative AI, it’s perhaps no surprise that it is also leaning on SageMaker as the core product to make it easier for its users to train and fine-tune LLMs.

“SageMaker HyperPod gives you the ability to create a distributed cluster with accelerated instances that’s optimized for distributed training,” Ankur Mehrotra, AWS’ general manager for SageMaker, told me in an interview ahead of today’s announcement. “It gives you the tools to efficiently distribute models and data across your cluster, and that speeds up your training process.”

He also noted that SageMaker HyperPod allows users to frequently save checkpoints, allowing them to pause, analyze and optimize the training process without having to start over. The service also includes a number of fail-safes so that when a GPU goes down for some reason, the entire training process doesn’t fail, too.
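To make the checkpointing idea concrete, here is a minimal, generic sketch of save-and-resume training in plain Python. It is not HyperPod's API: the function names, the JSON file format, and the `fail_at` parameter used to simulate a hardware failure are all assumptions made for illustration.

```python
import json
import os


def save_checkpoint(path, step, weights):
    """Write training state to a temp file, then rename it into place.

    The rename (os.replace) is atomic, so a crash mid-write never leaves
    a half-written checkpoint at `path`.
    """
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "weights": weights}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, weights) from the last checkpoint, or a fresh state."""
    if not os.path.exists(path):
        return 0, [0.0, 0.0]
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["weights"]


def train(path, total_steps, checkpoint_every=10, fail_at=None):
    """Toy training loop that resumes from the last checkpoint if one exists.

    `fail_at` is a hypothetical knob that simulates a node going down.
    """
    step, weights = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated hardware failure")
        weights = [w + 0.1 for w in weights]  # stand-in for a real update
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, weights)
    return step, weights
```

In this sketch, a run that fails at step 25 with checkpoints every 10 steps loses only the work done since step 20. Calling `train` again with the same path picks up from there instead of starting over.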

“For an ML team, for instance, that’s just interested in training the model: for them, it becomes like a zero-touch experience and the cluster becomes sort of a self-healing cluster in some sense,” Mehrotra explained. “Overall, these capabilities can help you train foundation models up to 40% faster, which, if you think about the cost and the time to market, is a huge differentiator.”

Users can opt to train on Amazon’s own custom Trainium (and now Trainium 2) chips or Nvidia-based GPU instances, including those using the H100 processor. The company promises that HyperPod can speed up the training process by up to 40%.

The company already has some experience with this using SageMaker for building LLMs. The Falcon 180B model, for example, was trained on SageMaker, using a cluster of thousands of A100 GPUs. Mehrotra noted that AWS was able to take what it learned from that and its previous experience with scaling SageMaker to build HyperPod.

Perplexity AI’s co-founder and CEO Aravind Srinivas told me that his company got early access to the service during its private beta. He noted that his team was initially skeptical about using AWS for training and fine-tuning its models.

“We did not work with AWS before,” he said. “There was a myth (it’s a myth, it’s not a fact) that AWS does not have great infrastructure for large model training and obviously we didn’t have time to do due diligence, so we believed it.” The team got connected with AWS, though, and the engineers there asked them to test the service out (for free). He also noted that he has found it easy to get support from AWS, and access to enough GPUs for Perplexity’s use case. It obviously helped that the team was already familiar with doing inference on AWS.

Srinivas also stressed that the AWS HyperPod team focused strongly on speeding up the interconnects that link Nvidia’s graphics cards. “They went and optimized the primitives, Nvidia’s various primitives, that allow you to communicate these gradients and parameters across different nodes,” he explained.
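For readers unfamiliar with what such primitives do, the sketch below simulates one common collective operation, an all-reduce that averages gradients, in plain Python. Srinivas did not say which primitives were tuned, so picking all-reduce is an assumption. The function name and list-based "workers" are also illustrative: real training uses a communication library across GPUs and nodes, not in-process lists.

```python
def all_reduce_mean(worker_grads):
    """Simulate an averaging all-reduce across workers.

    `worker_grads` holds one gradient vector (a list of floats) per worker.
    Every worker ends up with the same element-wise mean, which is what
    keeps model replicas in sync in data-parallel training.
    """
    num_workers = len(worker_grads)
    # Sum each gradient component across all workers.
    summed = [sum(component) for component in zip(*worker_grads)]
    mean = [s / num_workers for s in summed]
    # Each worker receives its own copy of the averaged gradient.
    return [list(mean) for _ in range(num_workers)]


# Three hypothetical workers, each with a two-element gradient.
grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
synced = all_reduce_mean(grads)
```

Because this exchange happens on every training step, the speed of the links between GPUs and nodes directly affects overall training time.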