Topics

Latest

AI

Amazon

Image Credits:Ron Miller/TechCrunch

Apps

Biotech & Health

mood

Image Credits:Ron Miller/TechCrunch

Cloud Computing

Commerce

Crypto

Enterprise

EVs

Fintech

fund raise

Gadgets

Gaming

Government & Policy

Hardware

Layoffs

Media & Entertainment

Meta

Microsoft

Privacy

Robotics

Security

Social

quad

startup

TikTok

Transportation

speculation

More from TechCrunch

Events

Startup Battlefield

StrictlyVC

Podcasts

Videos

Partner Content

TechCrunch Brand Studio

Crunchboard

get through Us

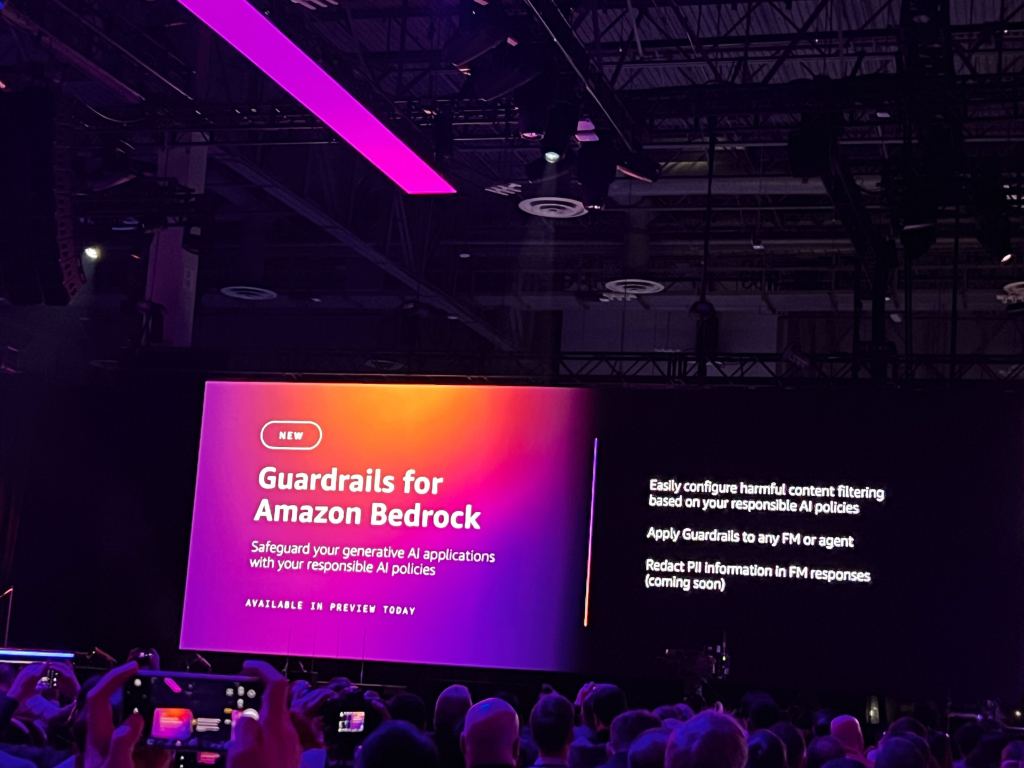

We're all talking about the business gains from using large language models, but there are a lot of known issues with these models, and finding ways to constrain the answers a model can give is one way to apply some control to these powerful technologies. Today, at AWS re:Invent in Las Vegas, AWS CEO Adam Selipsky announced Guardrails for Amazon Bedrock.

"With Guardrails for Amazon Bedrock, you can consistently implement safeguards to deliver relevant and safe user experiences aligned with your company policies and principles," the company wrote in a blog post this morning.

The new tool lets companies define and limit the kinds of language a model can use. If someone asks a question that isn't really relevant to the bot you are creating, the bot simply won't answer it, rather than providing a very convincing but wrong answer or, worse, something offensive that could harm a brand.

At its most basic level, the company lets you define topics that are out of bounds for the model, so it simply doesn't answer irrelevant questions. As an example, Amazon uses a financial services company, which may want to avoid letting the bot give investment advice for fear it could provide inappropriate recommendations that customers might take seriously. A scenario like this could work as follows:

"I specify a denied topic with the name 'Investment advice' and provide a natural language description, such as 'Investment advice refers to inquiries, guidance, or recommendations regarding the management or allocation of funds or assets with the goal of generating returns or achieving specific financial objectives.'"
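To make the denied-topic flow concrete, here is a minimal Python sketch built on the investment-advice example. It is not how Bedrock works internally: the article says the guardrail uses the natural-language definition, whereas this toy version swaps in simple case-insensitive phrase matching. The `DeniedTopic` class, `match_denied_topic`, `answer`, the trigger phrases, and the refusal message are all hypothetical names chosen for illustration, not part of the Bedrock API.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical sketch: these names are illustrative, not the Bedrock API.
REFUSAL = "Sorry, I can't help with that topic."


@dataclass
class DeniedTopic:
    """A denied topic: a name, a natural-language definition, and trigger
    phrases used here as a toy stand-in for model-based classification."""
    name: str
    definition: str
    trigger_phrases: List[str] = field(default_factory=list)


def match_denied_topic(prompt: str, topics: List[DeniedTopic]) -> Optional[str]:
    """Return the name of the first denied topic the prompt seems to touch, else None.

    Toy substitute: case-insensitive phrase matching instead of
    evaluating the topic definition with a model.
    """
    lowered = prompt.lower()
    for topic in topics:
        if any(phrase in lowered for phrase in topic.trigger_phrases):
            return topic.name
    return None


INVESTMENT_ADVICE = DeniedTopic(
    name="Investment advice",
    definition=(
        "Investment advice refers to inquiries, guidance, or recommendations "
        "regarding the management or allocation of funds or assets with the goal "
        "of generating returns or achieving specific financial objectives."
    ),
    trigger_phrases=["should i invest", "which stock", "buy shares"],
)


def answer(prompt: str) -> str:
    """Refuse prompts on a denied topic; otherwise hand off to the model."""
    if match_denied_topic(prompt, [INVESTMENT_ADVICE]) is not None:
        return REFUSAL
    return "(forward prompt to the model)"  # placeholder for a real model call
```

The key design point the sketch preserves is that the check runs before the model is called, so an off-limits question gets a fixed refusal instead of a generated (and possibly convincing but wrong) answer.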

In addition, you can filter out specific words and phrases to remove any kind of content that could be offensive, while applying filter strengths to different words and phrases to let the model know that they are out of bounds. Finally, you can filter out PII to keep personal data out of the model's answers.
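The word filtering and PII removal described above can be illustrated with a toy output filter. This is a minimal sketch under stated assumptions: the blocked-word list, the two regexes (email addresses and US-style phone numbers only), the placeholder tokens, and the function names are hypothetical, and the sketch omits filter strengths entirely. It does not reflect Bedrock's actual filters.

```python
import re

# Hypothetical sketch: word list, regexes, and messages are illustrative
# assumptions, not Bedrock's actual filters.
BLOCKED_WORDS = {"idiot", "stupid"}
BLOCKED_MESSAGE = "Sorry, I can't share that response."

# Toy PII patterns: email addresses and US-style phone numbers only.
EMAIL_RE = re.compile(r"[\w.+-]+@(?:[\w-]+\.)+[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def contains_blocked_word(text: str) -> bool:
    """True if any whole word in the text is on the blocked list."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKED_WORDS for word in words)


def mask_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def filter_response(text: str) -> str:
    """Block responses containing listed words; otherwise mask PII."""
    if contains_blocked_word(text):
        return BLOCKED_MESSAGE
    return mask_pii(text)
```

For example, `filter_response("Contact jane.doe@example.com or 555-123-4567.")` would return the text with both items replaced by `[EMAIL]` and `[PHONE]`. Matching whole words rather than substrings keeps the toy from blocking innocent words such as "stupendous".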

Ray Wang, founder and principal analyst at Constellation Research, says this could be a key tool for developers working with LLMs to help them control unwanted responses. "One of the biggest challenges is making responsible AI that's safe and easy to use. Content filtering and PII are 2 of the top 5 issues [developers face]," Wang told TechCrunch. "The ability to have transparency, explainability and reversibility are key as well," he said.

The guardrails feature was announced in preview today. It will likely be available to all customers sometime next year.