Image Credits: Shy Kids

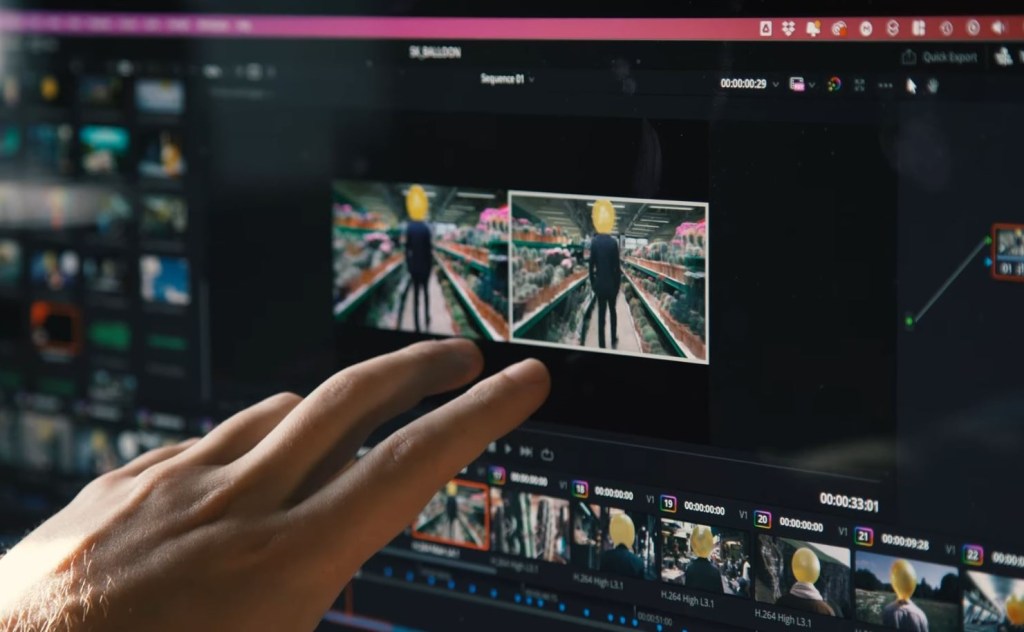

Example of a shot as it came out of Sora and how it ended up in the short. Image Credits: Shy Kids

OpenAI’s video generation tool Sora took the AI community by surprise in February with fluid, realistic video that seems miles ahead of competitors. But the carefully stage-managed debut left out a lot of details, details that have since been filled in by a filmmaker given early access to create a short using Sora.

Shy Kids is a digital production team based in Toronto that was picked by OpenAI as one of a few to produce short films, essentially for OpenAI promotional purposes, though they were given considerable creative freedom in creating “air head.” In an interview with visual effects news outlet fxguide, post-production artist Patrick Cederberg described “actually using Sora” as part of his work.

Cederberg’s interview is interesting and quite non-technical, so if you’re interested at all, head over to fxguide and read it. But here are some interesting nuggets about using Sora that tell us that, as impressive as it is, the model is perhaps less of a giant leap forward than we thought.

“Control is still the thing that is the most desirable and also the most elusive at this point. … The closest we could get was just being hyper-descriptive in our prompts. Explaining wardrobe for characters, as well as the type of balloon, was our way around consistency because shot to shot / generation to generation, there isn’t the feature set in place yet for full control over consistency.”

In other words, matters that are simple in traditional filmmaking, like choosing the color of a character’s clothing, take elaborate workarounds and checks in a generative system, because each shot is created independently of the others. That could obviously change, but it is certainly much more laborious at the moment.

Sora outputs had to be watched for unwanted elements as well: Cederberg described how the model would routinely generate a face on the balloon that the main character has for a head, or a string hanging down the front. These had to be removed in post, another time-consuming process, if they couldn’t get the prompt to exclude them.

Precise timing and movements of characters or the camera aren’t really possible: “There’s a little bit of temporal control about where these different actions happen in the actual generation, but it’s not precise … it’s kind of a shot in the dark,” said Cederberg.

For example, timing a gesture like a wave is a very approximate, suggestion-driven process, unlike manual animation. And a shot like a pan upward on the character’s body may or may not reflect what the filmmaker wants, so the team in this case rendered a shot composed in portrait orientation and did a crop pan in post. The generated clips were also often in slow motion for no particular reason.

In fact, terms from the everyday language of filmmaking, like “panning right” or “tracking shot,” produced inconsistent results in general, Cederberg said, which the team found pretty surprising.

“The researchers, before they approached artists to play with the tool, hadn’t really been thinking like filmmakers,” he said.

As a result, the team did hundreds of generations, each 10 to 20 seconds long, and ended up using only a handful. Cederberg estimated the ratio at 300:1, though of course we would probably all be surprised at the ratio on an ordinary shoot.

The last interesting wrinkle pertains to copyright: If you ask Sora to give you a “Star Wars” clip, it will refuse. And if you try to get around it with “robed man with a laser sword on a retro-futuristic spaceship,” it will also refuse, as by some mechanism it recognizes what you’re trying to do. It also refused to do an “Aronofsky type shot” or a “Hitchcock zoom.”

On one hand, it makes perfect sense. But it does prompt the question: If Sora knows what these are, does that mean the model was trained on that content, the better to recognize that it is infringing? OpenAI, which keeps its training data cards close to the vest (to the point of absurdity, as with CTO Mira Murati’s interview with Joanna Stern), will almost certainly never tell us.

As for Sora and its use in filmmaking, it’s clearly a powerful and useful tool in its place, but its place is not “creating films out of whole cloth.” Yet. As another villain once famously said, “that comes later.”