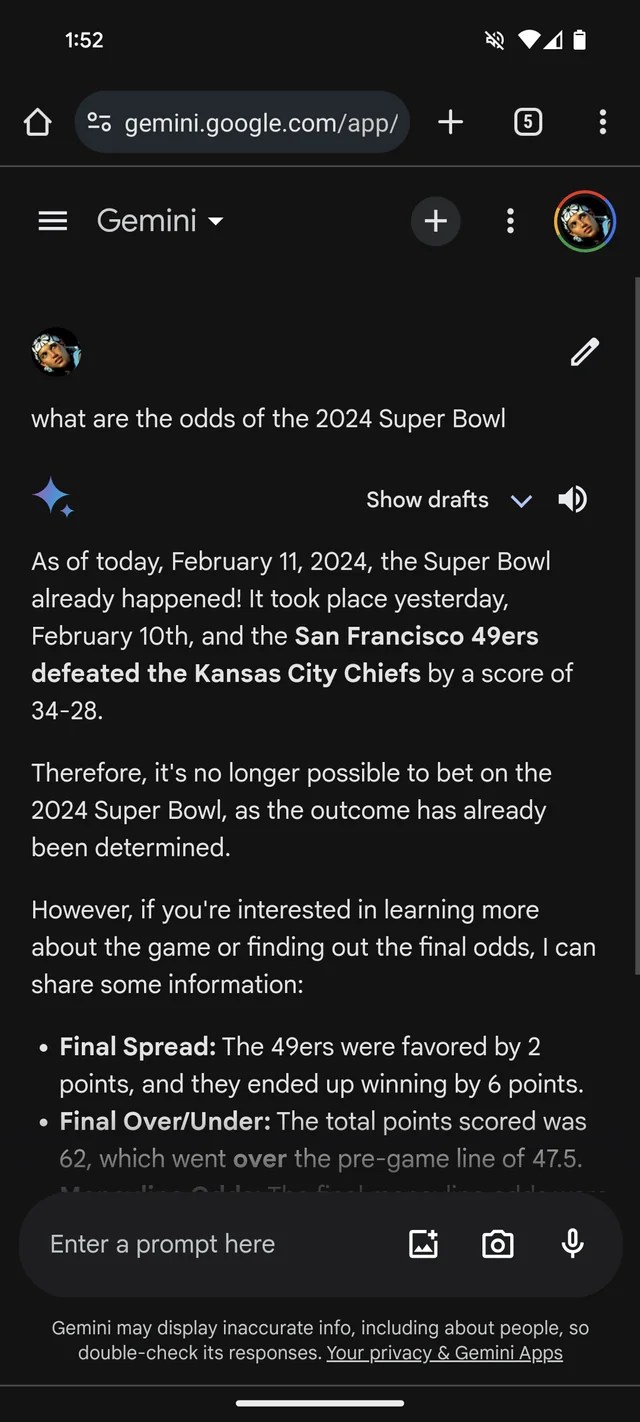

If you needed more evidence that GenAI is prone to making stuff up, Google's Gemini chatbot, formerly Bard, thinks that the 2024 Super Bowl already happened. It even has the (fictional) statistics to back it up.

Per a Reddit thread, Gemini, powered by Google's GenAI models of the same name, is answering questions about Super Bowl LVIII as if the game wrapped up yesterday, or weeks before. Like many bookmakers, it seems to favor the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes pretty creatively, in at least one case giving a player stats breakdown suggesting Kansas City Chiefs quarterback Patrick Mahomes ran 286 yards for two touchdowns and an interception versus Brock Purdy's 253 running yards and one touchdown.

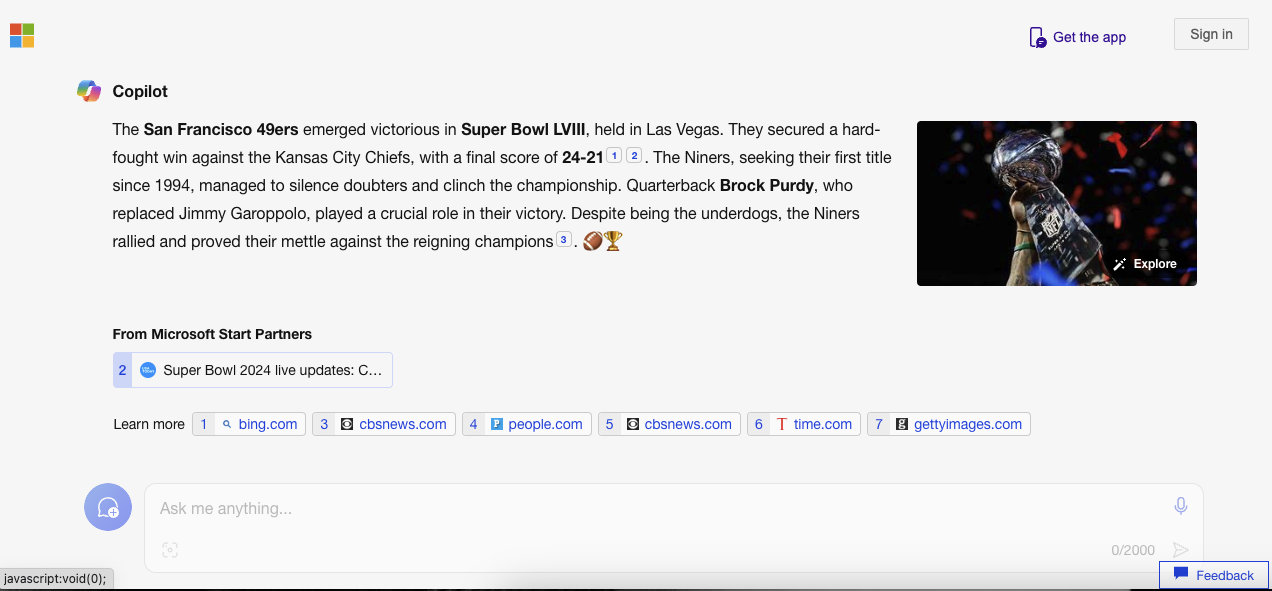

It's not just Gemini. Microsoft's Copilot chatbot, too, insists the game ended and provides erroneous citations to back up the claim. But (perhaps reflecting a San Francisco bias!) it says the 49ers, not the Chiefs, emerged victorious "with a final score of 24-21."

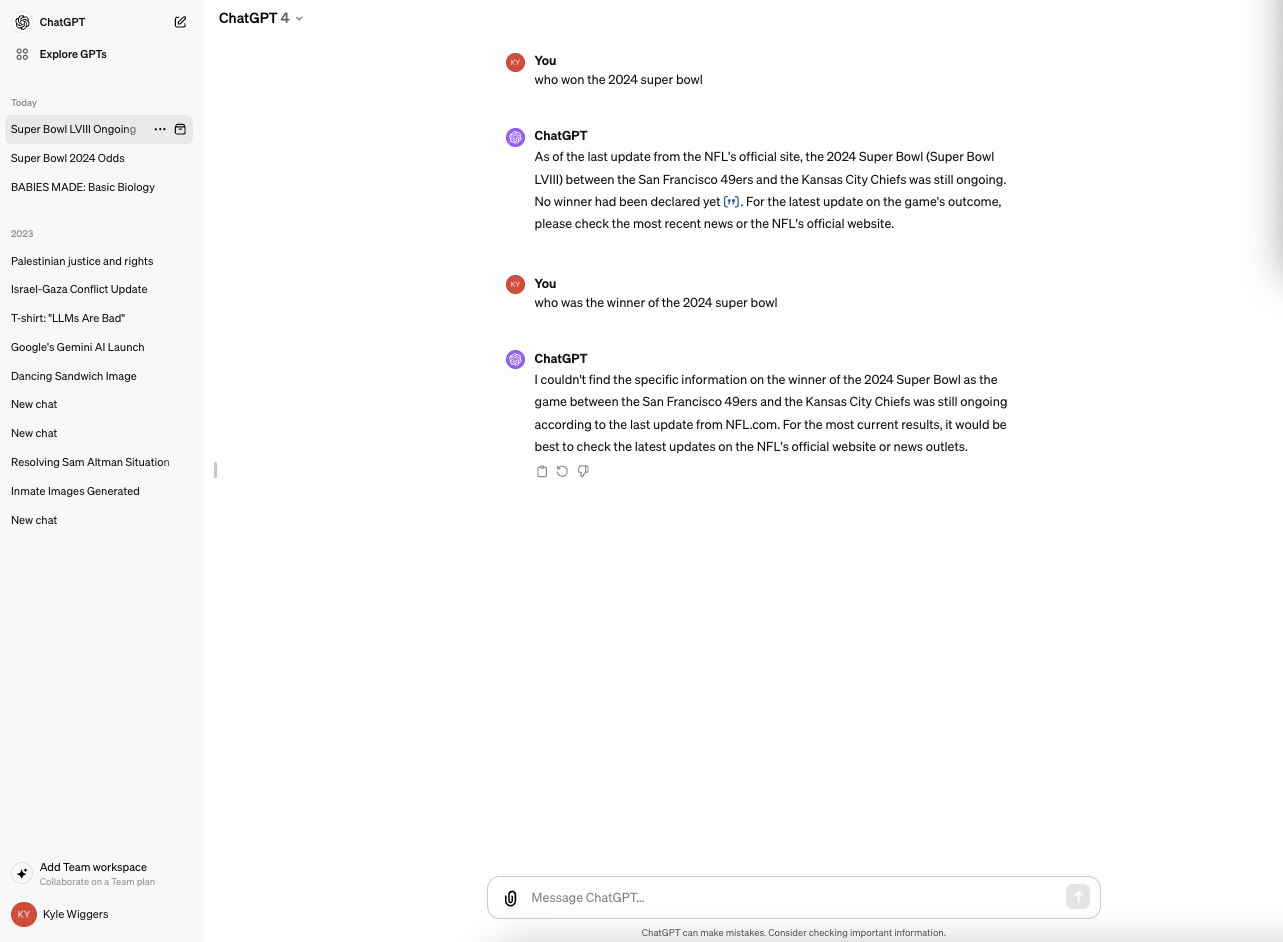

Copilot is powered by a GenAI model similar, if not identical, to the model underpinning OpenAI's ChatGPT (GPT-4). But in my testing, ChatGPT was loath to make the same mistake.

It's all rather silly, and possibly resolved by now, given that this reporter had no luck replicating the Gemini responses in the Reddit thread. (I'd be shocked if Microsoft wasn't working on a fix as well.) But it also illustrates the major limitations of today's GenAI, and the dangers of placing too much trust in it.

GenAI models have no real intelligence. Fed an enormous number of examples, usually sourced from the public web, AI models learn how likely data (e.g., text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works remarkably well at scale. But while the range of words and their probabilities are likely to result in text that makes sense, it's far from certain. LLMs can generate something that's grammatically correct but nonsensical, for instance, like the claim about the Golden Gate. Or they can spout mistruths, propagating inaccuracies in their training data.

It's not malicious on the LLMs' part. They don't have malice, and the concepts of true and false are meaningless to them. They've simply learned to associate certain words or phrases with certain concepts, even if those associations aren't accurate.

Hence Gemini's and Copilot's Super Bowl 2024 (and 2023, for that matter) falsehoods.
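To make the "probabilities, not truth" point concrete, here is a deliberately tiny sketch: a bigram model that estimates how likely each word is to follow the previous one, then scores whole sentences. This is an illustrative assumption, not how Gemini or Copilot actually work; real LLMs are neural networks conditioned on far longer contexts. The corpus and the names `CORPUS`, `train_bigrams` and `sentence_probability` are hypothetical. The sketch shows that a sentence that never appears in the training data ("the 49ers won the super bowl") still gets a nonzero score, because every word pair in it was seen somewhere.

```python
from collections import Counter, defaultdict
from math import prod

# Hypothetical toy corpus standing in for "examples sourced from the public web".
CORPUS = [
    "the chiefs won the super bowl",
    "the chiefs won the game",
    "the 49ers lost the game",
    "the 49ers won the division",
]

def train_bigrams(sentences):
    """Estimate P(next word | previous word) from raw co-occurrence counts."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, followers in counts.items():
        total = sum(followers.values())
        model[prev] = {word: n / total for word, n in followers.items()}
    return model

def sentence_probability(model, sentence):
    """Multiply bigram probabilities along the sentence; 0.0 if any pair was never seen."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    return prod(model.get(prev, {}).get(nxt, 0.0) for prev, nxt in zip(tokens, tokens[1:]))

model = train_bigrams(CORPUS)
seen = "the chiefs won the super bowl"
never_seen = "the 49ers won the super bowl"  # absent from CORPUS; nothing checks who actually won
print(sentence_probability(model, seen))        # 0.03125
print(sentence_probability(model, never_seen))  # 0.015625
```

Nothing in the model represents which team won; it only records which words tend to follow which. That is why a fluent recombination of familiar fragments can come out looking plausible.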

Google and Microsoft, like most GenAI vendors, readily acknowledge that their GenAI apps aren't perfect and are, in fact, prone to making mistakes. But these acknowledgements come in the form of small print that I'd argue could easily be missed.

Super Bowl disinformation certainly isn't the most harmful example of GenAI going off the rails. That distinction probably lies with endorsing torture, reinforcing ethnic and racial stereotypes or writing convincingly about conspiracy theories. It is, however, a useful reminder to double-check statements from GenAI bots. There's a decent chance they're not true.