
Google's long-promised, next-gen generative AI model, Gemini, has arrived. Sort of.

The version of Gemini launching this week, Gemini Pro, is essentially a lightweight offshoot of a more powerful, capable Gemini model set to arrive… sometime next year. But I'm getting ahead of myself.

Yesterday in a virtual press briefing, members of the Google DeepMind team (the driving force behind Gemini, alongside Google Research) gave a high-level overview of Gemini (technically "Gemini 1.0") and its capabilities.

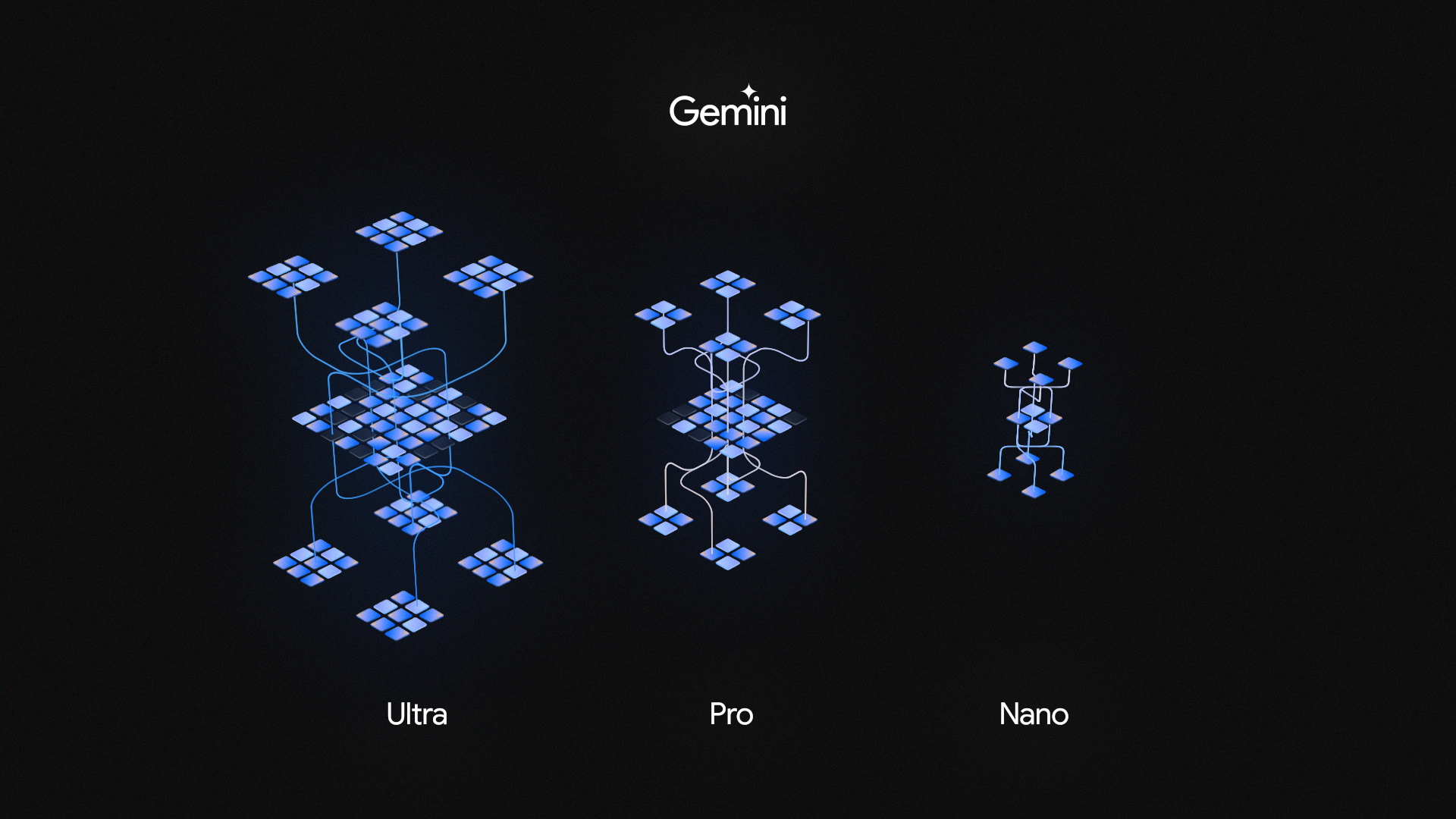

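Gemini, as it turns out, is really a family of AI models, not just one. It comes in three flavors:

- Gemini Ultra, the most capable model, due next year.
- Gemini Pro, the lighter-weight model launching now.
- Gemini Nano, a model that runs on-device, starting with the Pixel 8 Pro.

To make matters more confusing, Gemini Nano comes in two model sizes, Nano-1 (1.8 billion parameters) and Nano-2 (3.25 billion parameters), targeting low- and high-memory devices, respectively.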

The easiest place to try Gemini Pro is Bard, Google's ChatGPT competitor, which as of today is powered by a fine-tuned version of Gemini Pro, at least in English in the U.S. (and only for text, not images). Sissie Hsiao, GM of Google Assistant and Bard, said during the briefing that the fine-tuned Gemini Pro delivers improved reasoning, planning and understanding capabilities over the previous model driving Bard.

We can't independently confirm any of those improvements, I'll note. Google didn't allow reporters to test the models prior to their introduction and, indeed, didn't give live demos during the briefing.


Gemini Pro will also launch December 13 for enterprise customers using Vertex AI, Google's fully managed machine learning platform, and then head to Google's Generative AI Studio developer suite. (Some eagle-eyed users have already spotted Gemini model versions appearing in Vertex AI's model garden.) Elsewhere, Gemini will arrive in the coming months in Google products like Duet AI, Chrome and Ads, as well as Search as a part of Google's Search Generative Experience.

Gemini Nano, meanwhile, will launch soon in preview via Google's recently released AI Core app, exclusive to Android 14 on the Pixel 8 Pro for now; Android developers interested in incorporating the model into their apps can sign up today for a sneak peek. On the Pixel 8 Pro first and other Android devices in the future, Gemini Nano will power features that Google previewed during the Pixel 8 Pro's unveiling in October, like summarization in the Recorder app and suggested replies for supported messaging apps (starting with WhatsApp).

Natively multimodal

Gemini Pro, or at least the fine-tuned version of Gemini Pro powering Bard, isn't much to write home about.

Hsiao said that Gemini Pro is more capable at tasks such as summarizing content, brainstorming and writing, and outperforms OpenAI's GPT-3.5, the predecessor to GPT-4, in six benchmarks, including one (GSM8K) that measures grade school math reasoning. But GPT-3.5 is over a year old, hardly a challenging milestone to surpass at this point.

So what about Gemini Ultra? Surely it must be more impressive?

Somewhat .

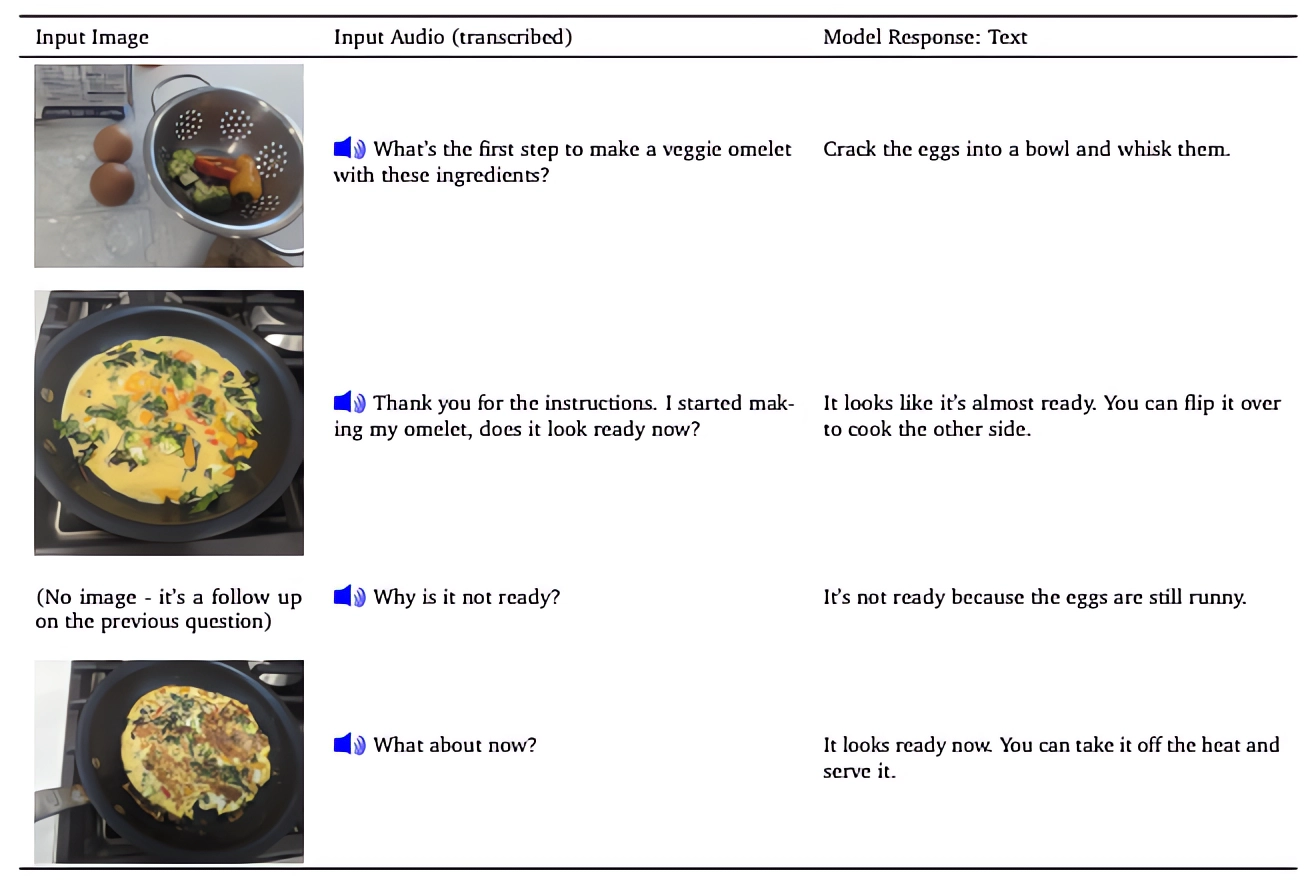

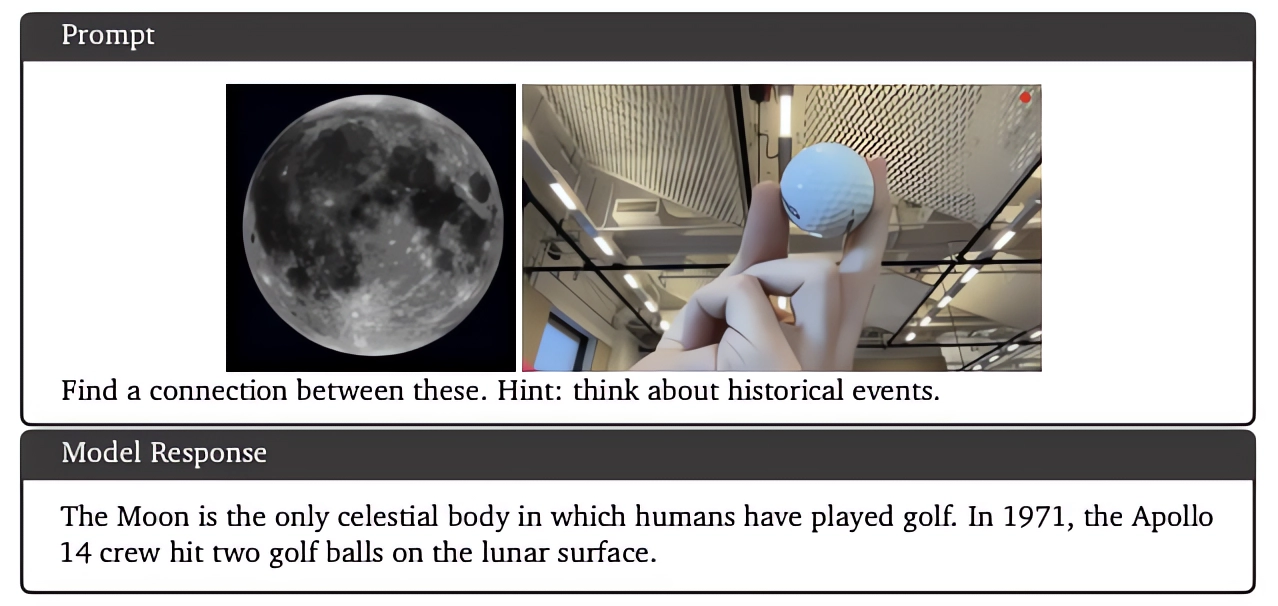

Like Gemini Pro, Gemini Ultra was trained to be "natively multimodal": in other words, pre-trained and fine-tuned on a large set of codebases, text in different languages, audio, images and videos. Eli Collins, VP of product at DeepMind, claims that Gemini Ultra can comprehend "nuanced" information in text, images, audio and code and answer questions relating to "complicated" topics, especially math and physics.

In this respect, Gemini Ultra does several things better than rival OpenAI's own multimodal model, GPT-4 with Vision, which can only understand the context of two modalities: words and images. Gemini Ultra can transcribe speech and answer questions about audio and videos (e.g. "What's happening in this clip?") in addition to art and photos.

"The standard approach to creating multimodal models involves training separate components for different modalities," Collins said during the briefing. "These models are reasonably good at performing certain tasks like describing an image, but they really struggle with more complex conceptual and complicated reasoning tasks. So we designed Gemini to be natively multimodal."

I wish I could tell you more about Gemini's training datasets; I'm curious myself. But Google repeatedly refused to answer questions from reporters about how it collected Gemini's training data, where the training data came from and whether any of it was licensed from a third party.

Collins did reveal that at least a portion of the data was from public web sources and that Google "filtered" it for quality and "inappropriate" material. But he didn't address the elephant in the room: whether creators who might've unknowingly contributed to Gemini's training data can opt out or expect/request compensation.

Google's not the first to keep its training data close to the chest. The data isn't only a competitive advantage, but a potential source of lawsuits pertaining to fair use. Microsoft, GitHub, OpenAI and Stability AI are among the generative AI vendors being sued in motions that accuse them of violating IP law by training their AI systems on copyrighted content, including artwork and e-books, without providing the creators credit or pay.

OpenAI, joining several other generative AI vendors, recently said it would allow artists to opt out of the training datasets for its future art-generating models. Google offers no such option for art-generating models or otherwise, and it seems that policy won't change with Gemini.

Google trained Gemini on its in-house AI chips, tensor processing units (TPUs), specifically TPU v4 and v5e (and in the future the v5p), and is running Gemini models on a combination of TPUs and GPUs. (According to a technical whitepaper released this morning, Gemini Pro took "a matter of weeks" to develop, with Gemini Ultra presumably taking much longer.) While Collins claimed that Gemini is Google's "most efficient" large generative AI model to date and "significantly cheaper" than its multimodal predecessors, he wouldn't say how many chips were used to train it, how much it cost or what the environmental impact of the training was.

One article estimates that training a model the size of GPT-4 emits upwards of 300 metric tons of CO2, significantly more than the annual emissions created by a single American (~5 tons of CO2). One would hope Google took steps to mitigate the impact, but since the company chose not to address the issue (at least not during the briefing this reporter attended), who can say?

A better model — marginally

In a prerecorded demo, Google showed how Gemini could be used to help with physics homework, solving problems step-by-step on a worksheet and pointing out possible mistakes in already filled-in answers.

In another demo, also prerecorded, Gemini was shown identifying scientific papers relevant to a particular problem set, extracting information from those papers and "updating" a chart from one by generating the formulas necessary to recreate the chart with more recent data.

"You could think of the work here as an extension of what [DeepMind] pioneered with 'chain of thought prompting,' which is that, with further instruction tuning, you could get the model to follow [more complex] instructions," Collins said. "If you think of the physics homework example, you're able to give the model an image but also instructions to follow, for example, to identify the mistake in the math of the physics homework. So the model is able to handle more complicated prompts."

Collins several times during the briefing touted Gemini Ultra's benchmark superiority, claiming that the model exceeds current state-of-the-art results on "30 of the 32 widely used academic benchmarks used in large language model research and development." But dive into the results, and it quickly becomes apparent that Gemini Ultra scores only marginally better than GPT-4 and GPT-4 with Vision across many of those benchmarks.

For example, on GSM8K, Gemini Ultra answers 94.4% of the math questions correctly compared to 92% in GPT-4's case. On the DROP benchmark for reading comprehension, Gemini Ultra barely edges out GPT-4, 82.4% to 80.9%. On VQAv2, a "neural" image understanding benchmark, Gemini does a measly 0.6 percentage points better than GPT-4 with Vision. And Gemini Ultra bests GPT-4 by just 0.5 percentage points on the Big-Bench Hard reasoning suite.

Collins noted that Gemini Ultra achieves a "state-of-the-art" score of 59.4% on a newer benchmark, MMMU, for multimodal reasoning, ahead of GPT-4 with Vision. But in a test set for commonsense reasoning, HellaSwag, Gemini Ultra is actually a fair bit behind GPT-4 with a score of 87.8%; GPT-4 scores 95.3%.

Asked by a reporter if Gemini Ultra, like other generative AI models, falls victim to hallucinating (i.e. confidently inventing facts), Collins said that it "wasn't a solved research problem." Take that how you will.

Presumably, bias and toxicity are well within the realm of possibility for Gemini Ultra too, given that even the best generative AI models today respond problematically and harmfully when prompted in certain ways. It's almost certainly as Anglocentric as other generative AI models: Collins said that, while Gemini Ultra can translate between around 100 languages, no specific work has been done to localize the model to Global South countries.

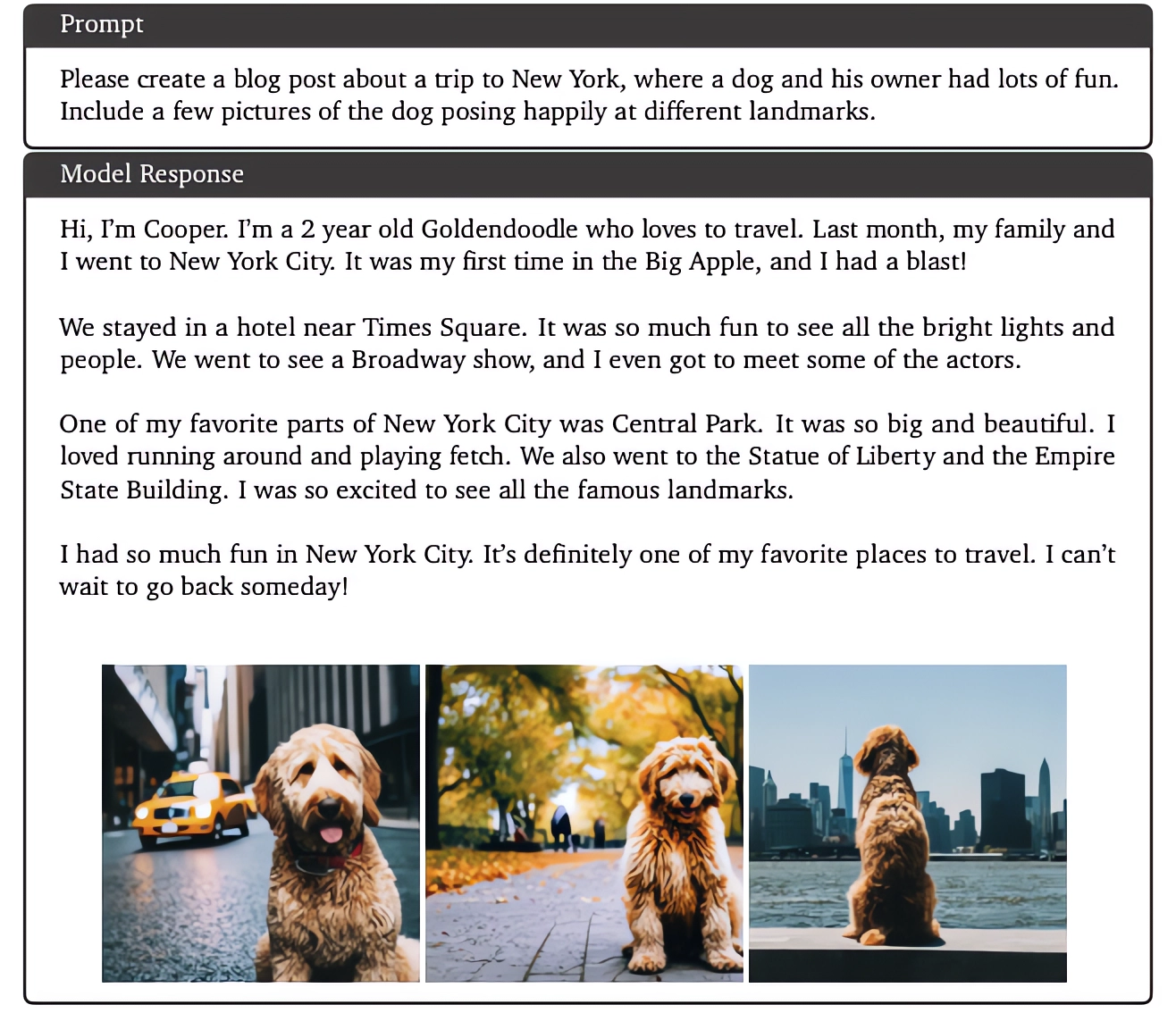

In another key limitation, while the Gemini Ultra architecture supports image generation (as does Gemini Pro, in theory), that capability won't make its way into the productized version of the model at launch. That's perhaps because the mechanism is somewhat more complex than how, say, ChatGPT generates images; rather than feed prompts to an image generator (like DALL-E 3, in ChatGPT's case), Gemini outputs images "natively" without an intermediary step.

Collins didn't provide a timeline as to when image generation might arrive, only an assurance that the work is "ongoing."

Rushed out the gate

The impression one gets from this week's Gemini "launch" is that it was a bit of a rush job.

At its annual I/O developer conference, Google promised that Gemini would deliver "impressive multimodal capabilities not seen in prior models" and "[efficiency] at tool and API integrations." And in an interview with Wired in June, Demis Hassabis, the head and co-founder of DeepMind, described Gemini as introducing somewhat novel capabilities to the text-generating AI domain, such as planning and the ability to solve problems.

It may well be that Gemini Ultra is capable of all of this, and more. But the briefing yesterday wasn't especially convincing, and, given Google's previous recent gen AI stumbles, I'd argue that it needed to be.

Google's been playing catch-up in generative AI since early this year, racing after OpenAI and the company's viral sensation ChatGPT. Bard was released in February to criticism for its inability to answer basic questions correctly; Google employees, including the company's ethics team, expressed concerns over the accelerated launch timeline.

Reports later emerged that Google hired overworked, underpaid third-party contractors from Appen and Accenture to annotate Bard's training data. The same may be true for Gemini; Google didn't deny it yesterday, and the technical whitepaper says only that annotators were paid "at least a local living wage."

Now, to be fair to Google, it's making progress in the sense that Bard has improved substantially since launch and that Google has successfully injected dozens of its products, apps and services with new generative AI-powered features, powered by homegrown models like PaLM 2 and Imagen.

But reporting suggests that Gemini's development has been troubled.

Gemini (which reportedly had direct involvement from Google higher-ups, including Jeff Dean, the company's most senior AI research executive) is said to be struggling with tasks like reliably handling non-English queries, which contributed to a delay in the launch of Gemini Ultra. (Gemini Ultra will only be available to select customers, developers, partners and "safety and responsibility experts" before rolling out to developers and enterprise customers, followed by Bard, "early next year," Google says.) Google doesn't even understand all of Gemini Ultra's novel capabilities yet, Collins said, nor has it figured out a monetization strategy for Gemini. (Given the sky-high cost of AI model training and inferencing, I doubt it'll be long before it does.)

So we're left with Gemini Pro, and very possibly an underwhelming Gemini Ultra, especially if the model's context window remains ~24,000 words as outlined in the technical whitepaper. (Context window refers to the text the model considers before generating any additional text.) GPT-4 handily beats that context window (~100,000 words), but context window admittedly isn't everything; we'll reserve judgment until we're able to get our hands on the model.

Could it be that Google's marketing, telegraphing that Gemini would be something truly remarkable rather than a slight move of the generative AI needle, is to blame for today's dud of a product launch? Perhaps. Or perhaps building state-of-the-art generative AI models is really hard, even if you reorganize your entire AI division to juice up the process.