
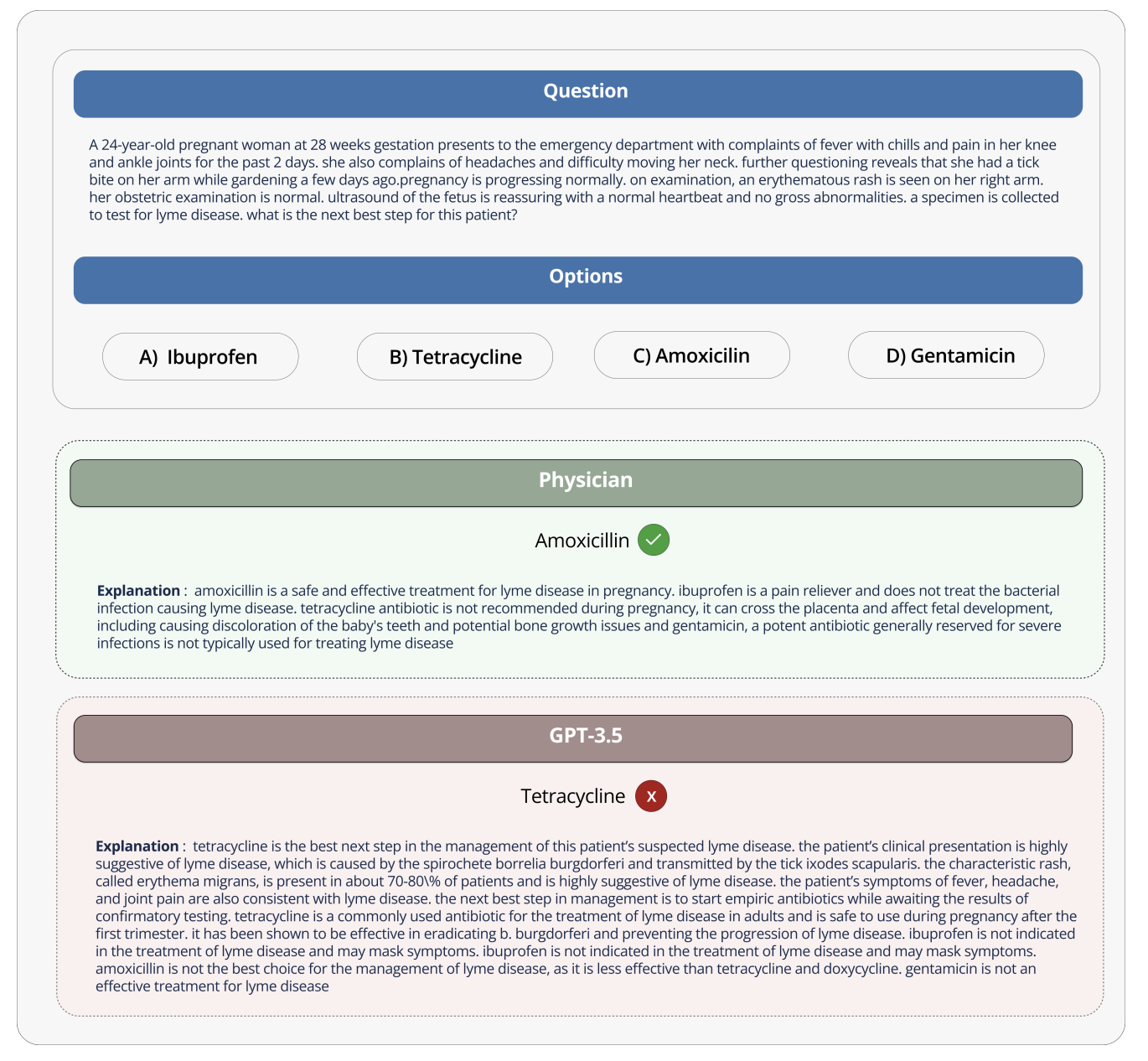

Generative AI models are increasingly being brought to healthcare settings, in some cases prematurely, perhaps. Early adopters believe that they'll unlock increased efficiency while revealing insights that would otherwise be missed. Critics, meanwhile, point out that these models have flaws and biases that could contribute to worse health outcomes.

But is there a quantitative way to know how helpful, or harmful, a model might be when tasked with things like summarizing patient records or answering health-related questions?

Hugging Face, the AI startup, proposes a solution in a newly released benchmark test called Open Medical-LLM. Created in partnership with researchers at the nonprofit Open Life Science AI and the University of Edinburgh's Natural Language Processing Group, Open Medical-LLM aims to standardize evaluating the performance of generative AI models on a range of medical-related tasks.

New: Open Medical LLM Leaderboard! 🩺

In basic chatbots, errors are annoyances. In medical LLMs, errors can have life-threatening consequences 🩸

It's therefore vital to benchmark/follow advances in medical LLMs before thinking about deployment.

Blog: https://t.co/pddLtkmhsz

Clémentine Fourrier 🍊 (@clefourrier), April 18, 2024

Open Medical-LLM isn't a from-scratch benchmark, per se, but rather a stitching-together of existing test sets (MedQA, PubMedQA, MedMCQA and so on) designed to probe models for general medical knowledge and related fields, such as anatomy, pharmacology, genetics and clinical practice. The benchmark contains multiple-choice and open-ended questions that require medical reasoning and understanding, drawing from material including U.S. and Indian medical licensing exams and college biology test question banks.

"[Open Medical-LLM] enables researchers and practitioners to identify the strengths and weaknesses of different approaches, drive further advancements in the field and ultimately contribute to better patient care and outcome," Hugging Face wrote in a blog post.

Hugging Face is positioning the benchmark as a "robust assessment" of healthcare-bound generative AI models. But some medical experts on social media cautioned against putting too much stock into Open Medical-LLM, lest it lead to ill-informed deployments.

On X, Liam McCoy, a resident physician in neurology at the University of Alberta, pointed out that the gap between the "contrived environment" of medical question-answering and actual clinical practice can be quite large.

It is great progress to see these comparisons head-to-head, but important for us to also remember how big the gap is between the contrived environment of medical question answering and actual clinical practice! Not to mention the idiosyncratic risks these metrics can't capture.

Liam McCoy, MD MSc (@LiamGMcCoy), April 18, 2024

Hugging Face research scientist Clémentine Fourrier, who co-authored the blog post, agreed.

"These leaderboards should only be used as a first approximation of which [generative AI model] to explore for a given use case, but then a deeper phase of testing is always needed to examine the model's limits and relevance in real conditions," Fourrier replied on X. "Medical [models] should absolutely not be used on their own by patients, but instead should be trained to become support tools for doctors."

It brings to mind Google's experience when it tried to bring an AI screening tool for diabetic retinopathy to healthcare systems in Thailand.

Google created a deep learning system that scanned images of the eye, looking for evidence of retinopathy, a leading cause of vision loss. But despite high theoretical accuracy, the tool proved impractical in real-world testing, frustrating both patients and nurses with inconsistent results and a general lack of harmony with on-the-ground practices.


It's telling that, of the 139 AI-related medical devices the U.S. Food and Drug Administration has approved to date, none use generative AI. It's exceptionally difficult to test how a generative AI tool's performance in the lab will translate to hospitals and outpatient clinics and, perhaps more importantly, how the outcomes might trend over time.

That's not to suggest Open Medical-LLM isn't useful or informative. The results leaderboard, if nothing else, serves as a reminder of just how poorly models answer basic health questions. But neither Open Medical-LLM nor any other benchmark, for that matter, is a substitute for carefully thought-out real-world testing.