Image Credits: hapabapa / Getty Images

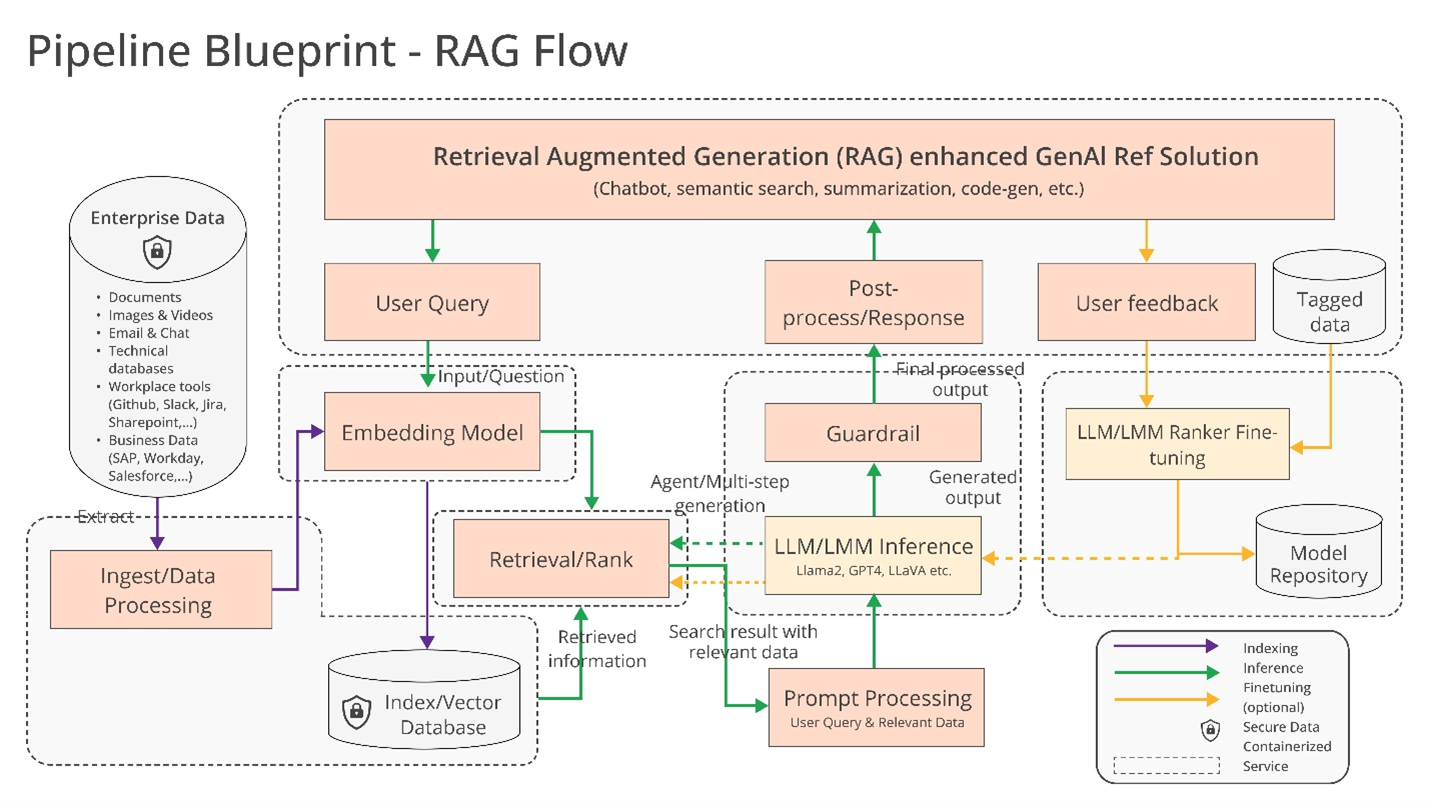

A diagram explaining RAG models. Image Credits: Intel

Can generative AI designed for the enterprise (for example, AI that autocompletes reports, spreadsheet formulas and so on) ever be interoperable? Along with a coterie of organizations including Cloudera and Intel, the Linux Foundation, the nonprofit organization that supports and maintains a growing number of open source efforts, aims to find out.

The Linux Foundation on Tuesday announced the launch of the Open Platform for Enterprise AI (OPEA), a project to foster the development of open, multi-provider and composable (i.e. modular) generative AI systems. Under the purview of the Linux Foundation’s LF AI and Data org, which focuses on AI- and data-related platform initiatives, OPEA’s goal will be to pave the way for the release of “hardened,” “scalable” generative AI systems that “harness the best open source innovation from across the ecosystem,” LF AI and Data’s executive director, Ibrahim Haddad, said in a press release.

“OPEA will unlock new possibilities in AI by creating a detailed, composable framework that stands at the forefront of technology stacks,” Haddad said. “This initiative is a testament to our mission to drive open source innovation and collaboration within the AI and data communities under a neutral and open governance model.”

In addition to Cloudera and Intel, OPEA (one of the Linux Foundation’s Sandbox Projects, an incubator program of sorts) counts among its members enterprise heavyweights like IBM-owned Red Hat, Hugging Face, Domino Data Lab, MariaDB and VMware.

So what might they build together exactly? Haddad hinted at a few possibilities, such as “optimized” support for AI toolchains and compilers, which enable AI workloads to run across different hardware components, as well as “heterogeneous” pipelines for retrieval-augmented generation (RAG).

RAG is becoming increasingly popular in enterprise applications of generative AI, and it’s not difficult to see why. Most generative AI models’ answers and actions are limited to the data on which they’re trained. But with RAG, a model’s knowledge base can be extended to info outside the original training data. RAG models reference this outside info (which can take the form of proprietary company data, a public database or some combination of the two) before generating a response or performing a task.
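To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is not OPEA's or Intel's implementation. The document list, the word-overlap scoring and every function name are assumptions made for illustration, and the step that would send the prompt to a generative model is left out entirely.

```python
# Toy illustration of retrieval-augmented generation (RAG).
# Everything here is hypothetical: the documents, the scoring rule
# and the function names are illustrative assumptions only.

# Stand-in for "outside info" such as proprietary company data.
DOCUMENTS = [
    "The Q3 expense report is due on October 15.",
    "VPN access requests go through the IT service desk.",
    "The cafeteria is closed on public holidays.",
]


def tokenize(text):
    """Lowercase the text and split on whitespace, dropping basic punctuation."""
    return [word.strip(".,?!").lower() for word in text.split()]


def score(query, document):
    """Toy relevance score: number of distinct query words found in the document."""
    doc_words = set(tokenize(document))
    return sum(1 for word in set(tokenize(query)) if word in doc_words)


def retrieve(query, documents, k=1):
    """Return the k documents that share the most words with the query."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved text to the question before it goes to a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    print(build_prompt("When is the expense report due?", DOCUMENTS))
```

A production pipeline would typically swap the word-overlap score for an embedding-based search and pass the assembled prompt to a model. The shape stays the same: retrieve first, then generate.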

Intel offered a few more details in its own press release:

Enterprises are challenged with a do-it-yourself approach [to RAG] because there are no de facto standards across components that allow enterprises to choose and deploy RAG solutions that are open and interoperable and that help them quickly get to market. OPEA intends to address these issues by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions.

Evaluation will also be a key part of what OPEA tackles.

In its GitHub repository, OPEA proposes a rubric for grading generative AI systems along four axes: performance, features, trustworthiness and “enterprise-grade” readiness. Performance, as OPEA defines it, pertains to “black-box” benchmarks from real-world use cases. Features is an appraisal of a system’s interoperability, deployment choices and ease of use. Trustworthiness looks at an AI model’s ability to guarantee “robustness” and quality. And enterprise readiness focuses on the requirements to get a system up and running sans major issues.

Rachel Roumeliotis, director of open source strategy at Intel, says that OPEA will work with the open source community to offer tests based on the rubric, as well as provide assessments and grading of generative AI deployments on request.

OPEA’s other endeavors are a bit up in the air at the moment. But Haddad floated the potential of open model development along the lines of Meta’s expanding Llama family and Databricks’ DBRX. Toward that end, in the OPEA repo, Intel has already contributed reference implementations for a generative-AI-powered chatbot, document summarizer and code generator optimized for its Xeon 6 and Gaudi 2 hardware.

Now, OPEA’s members are very clearly invested (and self-interested, for that matter) in building tooling for enterprise generative AI. Cloudera recently launched partnerships to create what it’s pitching as an “AI ecosystem” in the cloud. Domino offers a suite of apps for building and auditing business-forward generative AI. And VMware, oriented toward the infrastructure side of enterprise AI, last August rolled out new “private AI” compute products.

The question is whether these vendors will actually work together to build cross-compatible AI tools under OPEA.

There’s an obvious benefit to doing so. Customers will happily draw on multiple vendors depending on their needs, resources and budgets. But history has shown that it’s all too easy to become inclined toward vendor lock-in. Let’s hope that’s not the ultimate outcome here.