Topics

Latest

AI

Amazon

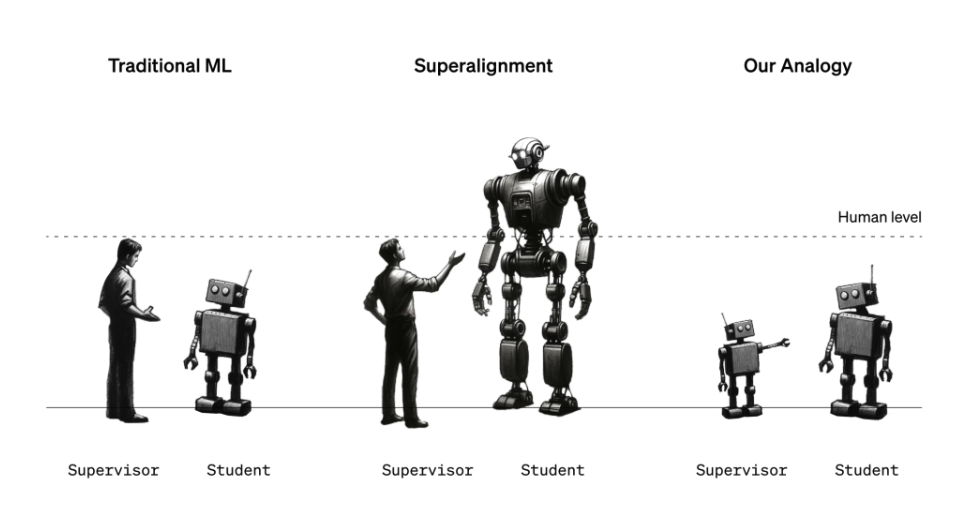

A figure illustrating the Superalignment team’s AI-based analogy for aligning superintelligent systems.Image Credits:OpenAIImage Credits:OpenAI

Apps

Biotech & Health

Climate

A figure illustrating the Superalignment team’s AI-based analogy for aligning superintelligent systems.Image Credits:OpenAIImage Credits:OpenAI

Cloud Computing

mercantilism

Crypto

go-ahead

EVs

Fintech

Fundraising

Gadgets

Gaming

Government & Policy

Hardware

Layoffs

Media & Entertainment

Meta

Microsoft

seclusion

Robotics

Security

Social

infinite

startup

TikTok

Transportation

Venture

More from TechCrunch

Events

Startup Battlefield

StrictlyVC

Podcasts

Videos

Partner Content

TechCrunch Brand Studio

Crunchboard

get through Us

While investors werepreparing to go nuclearafter Sam Altman’sunceremonious ousterfrom OpenAI and Altman wasplotting his returnto the society , the penis of OpenAI’sSuperalignmentteam were assiduously plugging along on the trouble of how to master AI that ’s smarter than human being .

Or at least , that ’s the impression they ’d like to give .

This hebdomad , I took a call with three of the Superalignment team ’s penis — Collin Burns , Pavel Izmailov and Leopold Aschenbrenner — who were in New Orleans at NeurIPS , the annual machine learning conference , to represent OpenAI ’s newest work on insure that AI system comport as intended .

OpenAIformedthe Superalignment team in July to produce ways to direct , regulate and govern “ superintelligent ” AI systems — that is , theoretical system with intelligence far exceed that of human .

“ Today , we can essentially ordinate model that are dumb than us , or maybe around human - level at most , ” Burns said . “ Aligning a modelling that ’s actually chic than us is much , much less obvious — how we can even do it ? ”

The Superalignment endeavour is being led by OpenAI co - founder and primary scientist Ilya Sutskever , which did n’t raise eyebrows in July — but surely does now in luminosity of the fact that Sutskeverwas among thosewho initially pushed for Altman ’s dismission . While somereportingsuggests Sutskever is in a “ United States Department of State of oblivion ” following Altman ’s return , OpenAI ’s PR tells me that Sutskever is indeed — as of today , at least — still heading the Superalignment team .

Superalignment is a minute of a ticklish case within the AI research community . Some contend that the subfield is premature ; others imply that it ’s a red Clupea harangus .

Join us at TechCrunch Sessions: AI

Exhibit at TechCrunch Sessions: AI

While Altman has call for comparisons between OpenAI and the Manhattan Project , going so far as to assemble ateamto probe AI models to protect against “ ruinous risks , ” including chemical and atomic threat , some expert say that there ’s little evidence to propose the startup ’s technology will take in world - finish , human - outsmarting capacity anytime soon — or ever . claim of imminent superintelligence , these expert add , service only to on purpose draw in attention by from and distract from the pressing AI regulatory issue of the day , like algorithmic bias and AI ’s trend toward toxicity .

For what it ’s deserving , Sutskever appear to believeearnestlythat AI — not OpenAI ’s per se , butsome embodimentof it — could someday pose an existential menace . He reportedly went so far as tocommission and burna wooden image at a ship’s company offsite to demonstrate his commitment to preventing AI hurt from befalling humanity , and commands a meaningful amount of OpenAI ’s compute — 20 % of its be computer chips — for the Superalignment team ’s research .

“ AI progress recently has been extraordinarily rapid , and I can assure you that it ’s not slow down , ” Aschenbrenner say . “ I think we ’re belong to reach human - stage system pretty presently , but it wo n’t stop there — we ’re fit to go right on through to superhuman system … So how do we array superhuman AI systems and make them safe ? It ’s really a problem for all of humanity — perhaps the most of import unsolved technical problem of our prison term . ”

The Superalignment team , currently , is attempting to build governance and dominance frameworks thatmightapply well to succeeding powerful AI system . It ’s not a straightforward undertaking , count that the definition of “ superintelligence ” — and whether a particular AI system has achieved it — is the subject area of robust debate . But the approaching the squad ’s settle on for now involves using a faint , less - advanced AI model ( e.g. GPT-2 ) to draw a more in advance , sophisticated model ( GPT-4 ) in desirable direction — and away from unsuitable ones .

“ A mountain of what we ’re trying to do is tell a model what to do and insure it will do it , ” Burns said . “ How do we get a model to accompany instruction and get a model to only help with things that are true and not make poppycock up ? How do we get a fashion model to tell us if the code it give is safe or egregious behavior ? These are the types of chore we require to be able to achieve with our enquiry . ”

But wait , you might say — what does AI guiding AI have to do with forbid humanity - baleful AI ? Well , it ’s an doctrine of analogy : The weak model is signify to be a stand - in for human supervisors while the warm model represent superintelligent AI . Similar to humans who might not be capable to make sensory faculty of a superintelligent AI arrangement , the weak good example ca n’t “ empathize ” all the complexity and refinement of the strong framework — make the setup utilitarian for proving out superalignment hypotheses , the Superalignment team says .

“ you’re able to think of a 6th - mark student trying to supervise a college educatee , ” Izmailov explained . “ allow ’s say the 6th grader is trying to tell the college student about a task that he kind of have it off how to figure out … Even though the supervision from the 6th grader can have mistake in the details , there ’s promise that the college scholar would understand the gist and would be able to do the task better than the supervisory program . ”

In the Superalignment team ’s setup , a weak model fine - tune on a particular undertaking generates labels that are used to “ commune ” the panoptic strokes of that task to the strong model . Given these label , the solid model can infer more or less correctly harmonize to the weak model ’s design — even if the weak modeling ’s label turn back errors and biases , the team retrieve .

The weak - strong model approach might even lead to breakthroughs in the area of hallucinations , claims the squad .

“ Hallucinations are really quite interesting , because internally , the theoretical account in reality know whether the thing it ’s saying is fact or fiction , ” Aschenbrenner said . “ But the style these role model are trained today , human supervisory program pay back them ‘ thumbs up , ’ ‘ thumbs down ’ for saying thing . So sometimes , inadvertently , mankind reinforce the poser for saying things that are either false or that the model does n’t actually know about and so on . If we ’re successful in our research , we should acquire proficiency where we can basically summon the model ’s noesis and we could use that summon on whether something is fact or fabrication and use this to deoxidise hallucinations . ”

But the analogy is n’t perfect . So OpenAI wants to crowdsource idea .

To that end , OpenAI is plunge a $ 10 million grant program to patronize technical enquiry on superintelligent alliance , tranches of which will be reserve for academic labs , nonprofits , single researchers and graduate student . OpenAI also plan to also host an donnish conference on superalignment in early 2025 , where it ’ll share and push the superalignment award finalists ’ work .

inquisitively , a serving of financing for the subsidization will occur from former Google CEO and president Eric Schmidt . Schmidt — an warm supporter of Altman — is fast becoming a poster child for AI doomerism , asserting the arrival of dangerous AI systems is nigh and that regulators are n’t doing enough in preparation . It ’s not out of a sensory faculty of altruism , needs — report inProtocolandWirednote that Schmidt , an active AI investor , stands to benefit enormously commercially if the U.S. politics were to put through his nominate blueprint to bolster AI research .

The donation might be perceive as virtue signaling through a misanthropic lens , then . Schmidt ’s personal fortune stand up around an estimated $ 24 billion , and he ’s poured hundreds of millions into other , decidedlyless ethics - focusedAI venture andfunds — including his own .

Schmidt denies this is the fount , of course .

“ AI and other egress technologies are reshape our economy and society , ” he said in an emailed statement . “ secure they are coordinate with human values is decisive , and I am proud to support OpenAI ’s new [ grants ] to develop and hold in AI responsibly for public benefit . ”

Indeed , the involvement of a figure with such vaporous commercial-grade motivations begs the doubt : Will OpenAI ’s superalignment research as well as the enquiry it ’s encouraging the biotic community to submit to its future conference be made available for anyone to utilise as they see set ?

The Superalignment team assured me that , yes , both OpenAI ’s research — include code — and the work of others who receive grants and prizes from OpenAI on superalignment - related work will be shared publicly . We ’ll hold the troupe to it .

“ Contributing not just to the safety of our models but the base hit of other labs ’ models and ripe AI in worldwide is a part of our charge , ” Aschenbrenner suppose . “ It ’s really core to our mission of building [ AI ] for the benefit of all of humanity , safely . And we imagine that doing this research is perfectly essential for making it good and making it dependable . ”