Image Credits:PVML

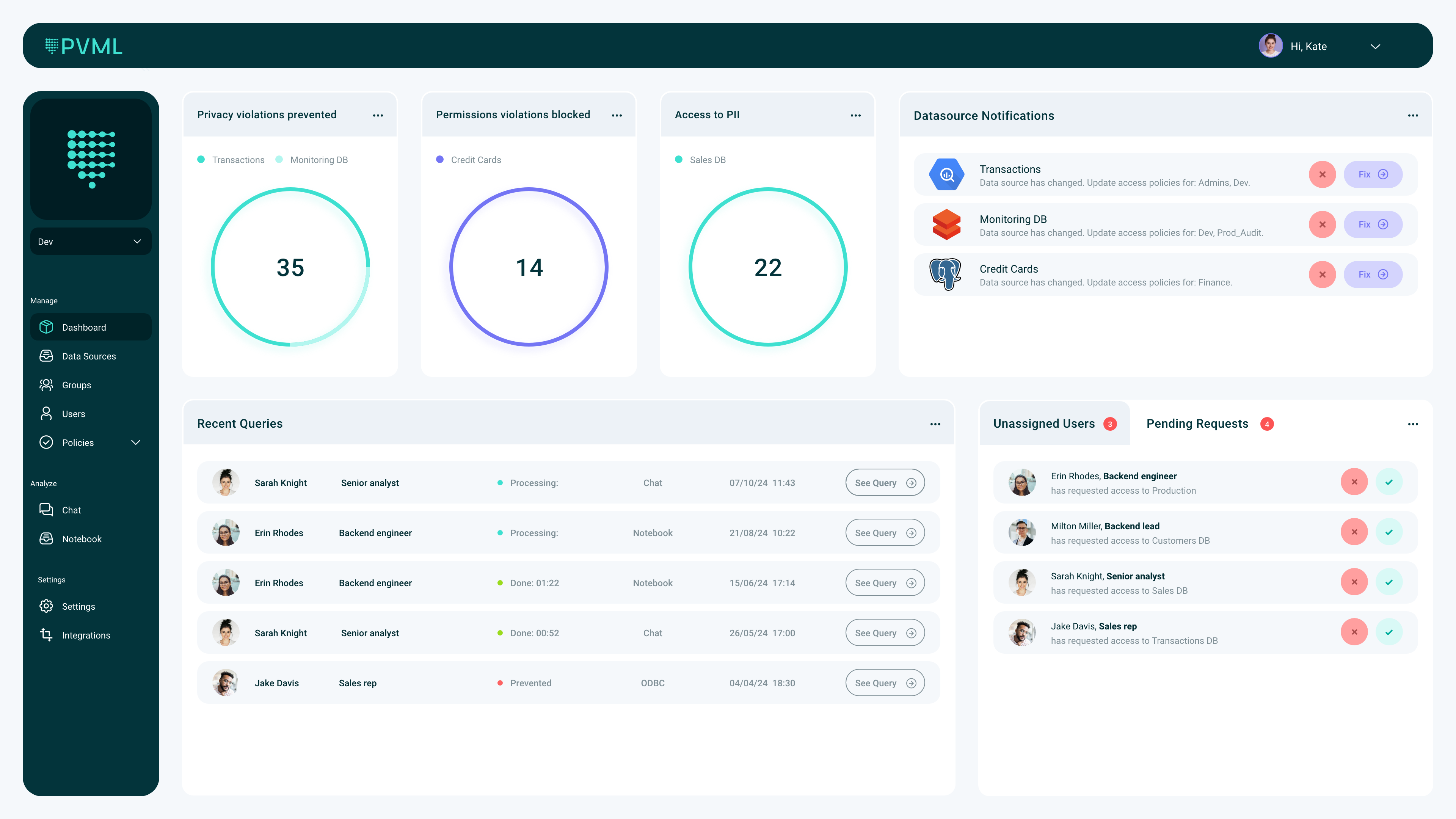

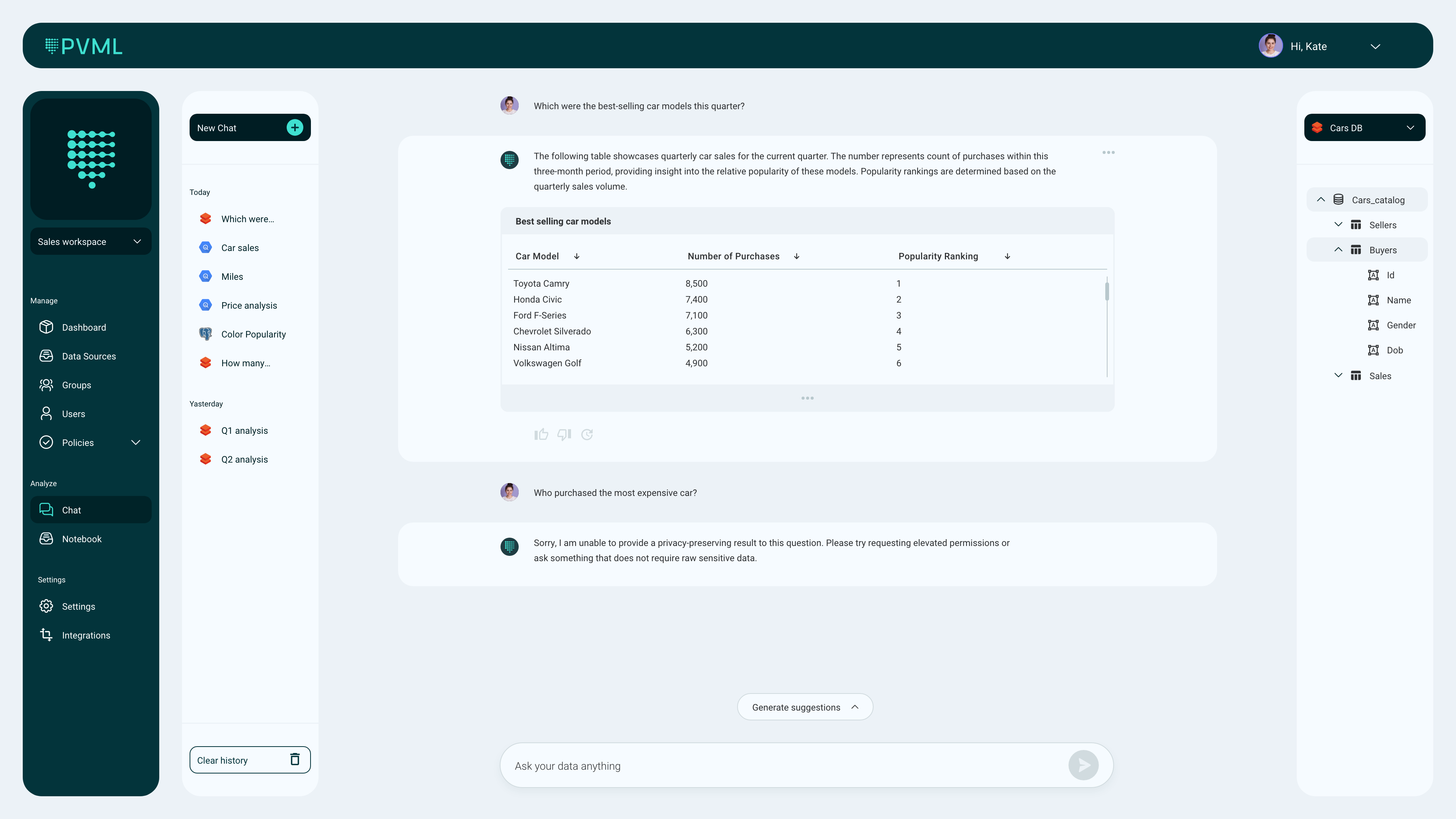

Enterprises are hoarding more data than ever to fuel their AI ambitions, but at the same time, they are also worried about who can access this data, which is often of a very private nature. PVML is offering an interesting solution by combining a ChatGPT-like tool for analyzing data with the safety guarantees of differential privacy. Using retrieval-augmented generation (RAG), PVML can access a corporation’s data without moving it, taking away another security consideration.

The Tel Aviv-based company recently announced that it has raised an $8 million seed round led by NFX, with participation from FJ Labs and Gefen Capital.

The company was founded by husband-and-wife team Shachar Schnapp (CEO) and Rina Galperin (CTO). Schnapp got his doctorate in computer science, specializing in differential privacy, and then worked on computer vision at General Motors, while Galperin got her master’s in computer science with a focus on AI and natural language processing and worked on machine learning projects at Microsoft.

“A lot of our experience in this domain came from our work in big corporates and large companies where we saw that things are not as efficient as we were hoping for as naïve students, perhaps,” Galperin said. “The main value that we want to bring organizations as PVML is democratizing data. This can only happen if you, on one hand, protect this very sensitive data, but, on the other hand, allow easy access to it, which today is synonymous with AI. Everybody wants to analyze data using free text. It’s much easier, faster and more efficient, and our secret sauce, differential privacy, enables this integration very easily.”

Differential privacy is far from a new concept. The core idea is to ensure the privacy of individual users in large datasets and provide mathematical guarantees for that. One of the most common ways to achieve this is to introduce a degree of randomness into the dataset, but in a way that doesn’t alter the data analysis.
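
To make the idea of calibrated randomness concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is a generic illustration, not PVML’s algorithm, which the company has not described here. The function names, the sample data and the choice of `epsilon` are all assumptions made for this example, and production systems need extra care (for instance around floating-point behavior) that this sketch ignores.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the items matching `predicate`.

    Adding or removing one person changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical data: ages of eight users.
    ages = [23, 35, 41, 29, 52, 67, 31, 45]
    rng = random.Random()
    noisy = private_count(ages, lambda age: age > 40, epsilon=1.0, rng=rng)
    print(f"Noisy count of users over 40: {noisy:.2f}")
```

Any single answer is perturbed, so it reveals little about whether one particular person is in the dataset, while the noise averages out to zero across many queries or large aggregates. That is the sense in which the randomness leaves the analysis largely intact.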

The team argues that today’s data access solutions are ineffective and create a lot of overhead. Often, for example, a lot of data has to be removed in the process of enabling employees to gain secure access to data, but that can be counterproductive because you may not be able to effectively use the redacted data for some tasks (plus the additional lead time to access the data means real-time use cases are often impossible).

The promise of using differential privacy means that PVML’s users don’t have to make changes to the original data. This avoids almost all of the overhead and unlocks this information safely for AI use cases.

Virtually all the large tech companies now use differential privacy in one form or another, and make their tools and libraries available to developers. The PVML team argues that it hasn’t really been put into practice yet by most of the data community.

“The current knowledge about differential privacy is more theoretical than practical,” Schnapp said. “We decided to take it from theory to practice. And that’s exactly what we’ve done: We develop practical algorithms that work well on data in real-life scenarios.”

None of the differential privacy work would matter if PVML’s actual data analysis tools and platform weren’t useful. The most obvious use case here is the ability to chat with your data, all with the guarantee that no raw data can leak into the chat. Using RAG, PVML can bring hallucinations down to almost zero and the overhead is minimal since the data stays in place.

But there are other use cases, too. Schnapp and Galperin noted how differential privacy also allows companies to now share data between business units. In addition, it may also allow some companies to monetize access to their data to third parties, for example.

“In the stock market today, 70% of transactions are made by AI,” said Gigi Levy-Weiss, NFX general partner and co-founder. “That’s a taste of things to come, and organizations who adopt AI today will be a step ahead tomorrow. But companies are afraid to connect their data to AI, because they fear the exposure, and for good reasons. PVML’s unique technology creates an invisible layer of protection and democratizes access to data, enabling monetization use cases today and paving the way for tomorrow.”