Image Credits: Tomohiro Ohsumi / Getty Images

Image Credits: EPFL

Image Credits: Stanford

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, Google paused its AI chatbot Gemini’s ability to generate images of people after a segment of users complained about historical inaccuracies. Told to depict “a Roman legion,” for instance, Gemini would show an anachronistic, cartoonish group of racially diverse foot soldiers while rendering “Zulu warriors” as Black.

It appears that Google, like some other AI vendors including OpenAI, had implemented clumsy hardcoding under the hood to attempt to “correct” for biases in its model. In response to prompts like “show me images of only women” or “show me images of only men,” Gemini would refuse, asserting such images could “contribute to the exclusion and marginalization of other genders.” Gemini was also loath to generate images of people identified solely by their race (e.g. “white people” or “black people”) out of ostensible concern for “reducing individuals to their physical characteristics.”

Right wingers have latched on to the bugs as evidence of a “woke” agenda being perpetuated by the tech elite. But it doesn’t take Occam’s razor to see the less nefarious truth: Google, burned by its tools’ biases before (see: classifying Black men as gorillas, mistaking thermal guns in Black people’s hands as weapons, etc.), is so desperate to avoid history repeating itself that it’s manifesting a less biased world in its image-generating models, however erroneous.

In her best-selling book “White Fragility,” anti-racist educator Robin DiAngelo writes about how the erasure of race (“color blindness,” by another phrase) contributes to systemic racial power imbalances rather than mitigating or alleviating them. By purporting to “not see color” or reinforcing the notion that simply acknowledging the struggle of people of other races is sufficient to label oneself “woke,” people perpetuate harm by avoiding any substantive conversation on the topic, DiAngelo says.

Google’s ginger treatment of race-based prompts in Gemini didn’t avoid the issue, per se, but disingenuously attempted to conceal the worst of the model’s biases. One could argue (and many have) that these biases shouldn’t be ignored or glossed over, but addressed in the broader context of the training data from which they arise, i.e. society on the world wide web.

Yes, the data sets used to train image generators generally contain more white people than Black people, and yes, the images of Black people in those data sets reinforce negative stereotypes. That’s why image generators sexualize certain women of color, depict white men in positions of authority and generally favor wealthy Western perspectives.

Some may argue that there’s no winning for AI vendors. Whether they tackle, or choose not to tackle, models’ biases, they’ll be criticized. And that’s true. But I posit that, either way, these models are lacking in explanation, packaged in a fashion that minimizes the ways in which their biases manifest.

Were AI vendors to address their models’ shortcomings head on, in humble and transparent language, it’d go a lot further than haphazard attempts at “fixing” what’s essentially unfixable bias. We all have bias, the truth is, and we don’t treat people the same as a result. Nor do the models we’re building. And we’d do well to acknowledge that.

‘Embarrassing and wrong’: Google admits it lost control of image-generating AI

Here are some other AI stories of note from the past few days:

More machine learnings

AI models seem to know a lot, but what do they actually know? Well, the answer is nothing. But if you phrase the question slightly differently… they do seem to have internalized some “meanings” that are similar to what humans know. Although no AI truly understands what a cat or a dog is, could it have some sense of similarity encoded in its embeddings of those two words that is different from, say, cat and bottle? Amazon researchers believe so.

Their research compared the “trajectories” of similar but distinct sentences, like “the dog barked at the burglar” and “the burglar caused the dog to bark,” with those of grammatically similar but different sentences, like “a cat sleeps all day” and “a girl jogs all afternoon.” They found that the ones humans would find similar were indeed internally treated as more similar despite being grammatically different, and vice versa for the grammatically similar ones. OK, I feel like this paragraph was a little confusing, but suffice it to say that the meanings encoded in LLMs appear to be more robust and sophisticated than expected, not totally naïve.
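To make the idea of “similarity encoded in embeddings” a little more concrete, here is a minimal sketch using cosine similarity, a common way to compare two vectors. This is not the Amazon researchers’ method, and the four-dimensional vectors below are invented purely for illustration; real model embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, made up for this example (not from any real model).
embeddings = {
    "cat":    [0.9, 0.8, 0.1, 0.0],
    "dog":    [0.8, 0.9, 0.2, 0.1],
    "bottle": [0.1, 0.0, 0.9, 0.8],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_bottle = cosine_similarity(embeddings["cat"], embeddings["bottle"])

print(f"cat vs dog:    {cat_dog:.3f}")
print(f"cat vs bottle: {cat_bottle:.3f}")
```

With these made-up numbers, “cat” scores closer to “dog” than to “bottle,” which is the kind of relationship the paragraph above describes.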

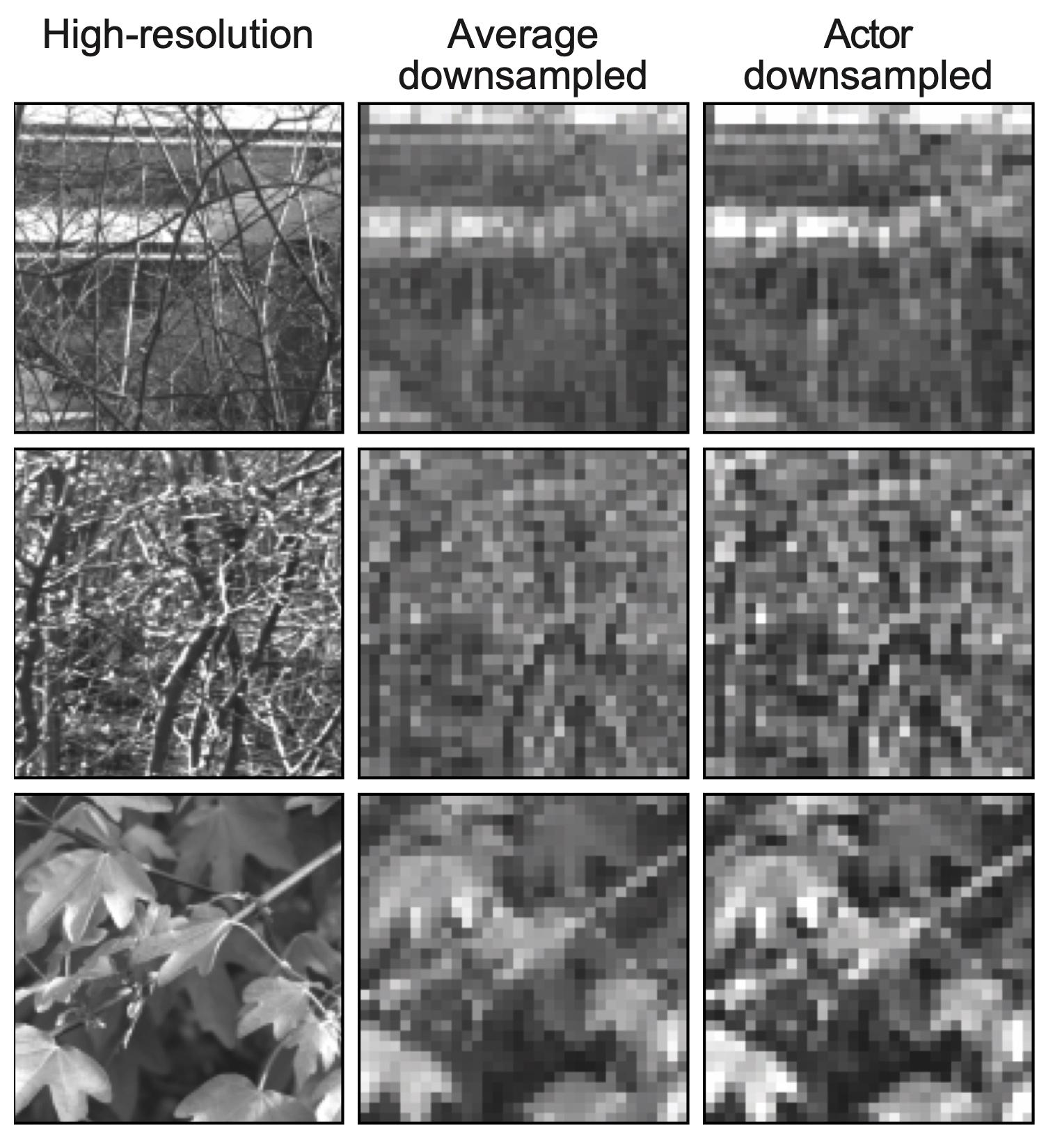

Neural encoding is proving useful in prosthetic vision, Swiss researchers at EPFL have found. Artificial retinas and other ways of replacing parts of the human visual system generally have very limited resolution due to the limitations of microelectrode arrays. So no matter how detailed the image is coming in, it has to be transmitted at a very low fidelity. But there are different ways of downsampling, and this team found that machine learning does a great job at it.

“We found that if we applied a learning-based approach, we got improved results in terms of optimized sensory encoding. But more surprising was that when we used an unconstrained neural network, it learned to mimic aspects of retinal processing on its own,” said Diego Ghezzi in a news release. It does perceptual compression, basically. They tested it on mouse retinas, so it isn’t just theoretical.
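For a sense of what “downsampling” means here, the sketch below shows one fixed, non-learned approach: averaging each block of pixels into a single value. It is only an assumed baseline for illustration, not the EPFL team’s technique; a learning-based approach would replace this fixed rule with a trained network. The function name and the tiny 4×4 image are hypothetical.

```python
def downsample_mean(image, factor):
    """Shrink a 2D grayscale image by averaging non-overlapping factor x factor blocks."""
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be divisible by factor")
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Collect every pixel in the current block and average them.
            block = [image[r + dr][c + dc]
                     for dr in range(factor)
                     for dc in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out

# Hypothetical 4x4 "image" reduced to a 2x2 grid, like a very coarse electrode array.
image = [
    [0, 0, 8, 8],
    [0, 0, 8, 8],
    [4, 4, 2, 2],
    [4, 4, 2, 2],
]
low_res = downsample_mean(image, 2)
print(low_res)
```

Block averaging treats every pixel equally, which is exactly the kind of choice a learned encoder is free to improve on.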

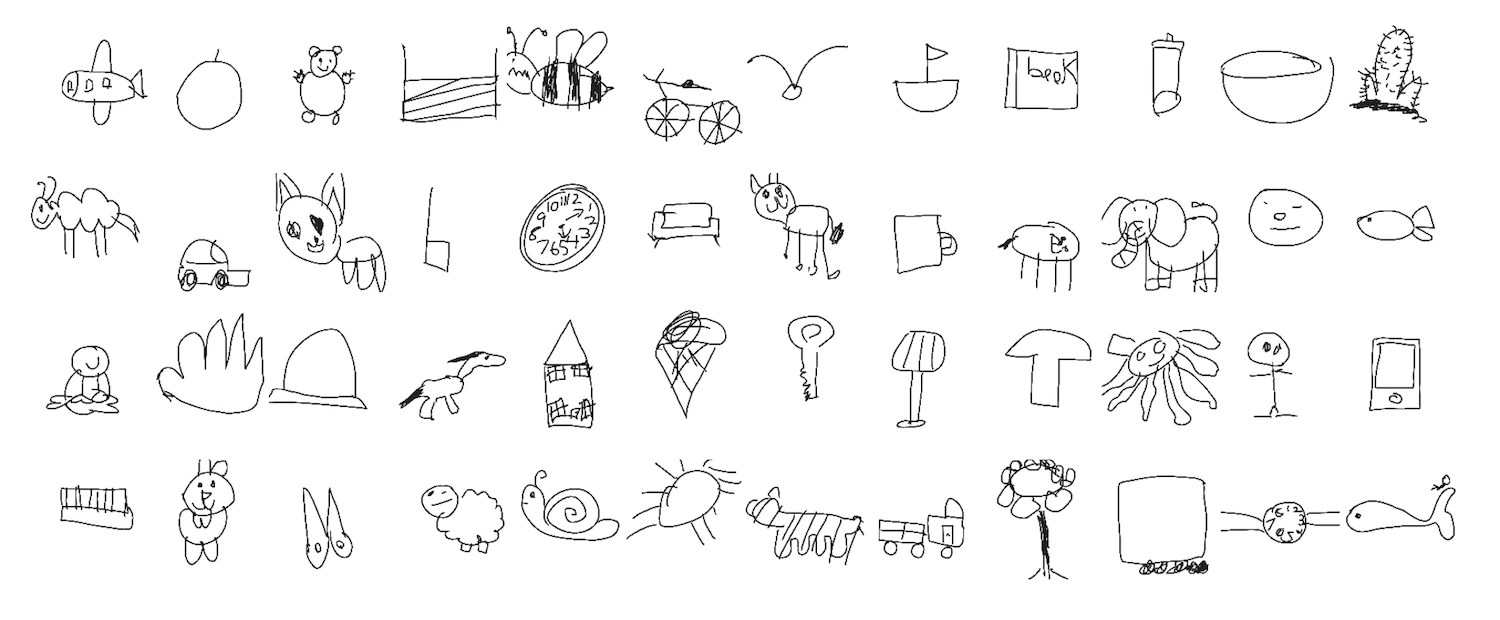

An interesting application of computer vision by Stanford researchers hints at a mystery in how children develop their drawing skills. The team solicited and analyzed 37,000 drawings by kids of various objects and animals, and also (based on kids’ responses) how recognizable each drawing was. Interestingly, it wasn’t just the inclusion of signature features like a rabbit’s ears that made drawings more recognizable by other kids.

“The kinds of features that lead drawings from older children to be recognizable don’t seem to be driven by just a single feature that all the older kids learn to include in their drawings. It’s something much more complex that these machine learning systems are picking up on,” said lead researcher Judith Fan.

Chemists (also at EPFL) found that LLMs are also surprisingly adept at helping out with their work after minimal training. It’s not just doing chemistry directly, but rather being fine-tuned on a body of work that chemists individually can’t possibly know all of. For instance, in thousands of papers there may be a few hundred statements about whether a high-entropy alloy is single or multiple phase (you don’t have to know what this means; they do). The system (based on GPT-3) can be trained on this type of yes/no question and answer, and soon is able to extrapolate from that.

It’s not some huge advance, just more evidence that LLMs are a useful tool in this sense. “The point is that this is as easy as doing a literature search, which works for many chemical problems,” said researcher Berend Smit. “Querying a foundational model might become a routine way to bootstrap a project.”

Last, a word of caution from Berkeley researchers, though now that I’m reading the post again I see EPFL was involved with this one too. Go Lausanne! The group found that imagery found via Google was much more likely to enforce gender stereotypes for certain jobs and words than text mentioning the same thing. And there were also just way more men present in both cases.

Not only that, but in an experiment, they found that people who viewed images rather than reading text when researching a role associated those roles with one gender more reliably, even days later. “This isn’t only about the frequency of gender bias online,” said researcher Douglas Guilbeault. “Part of the story here is that there’s something very sticky, very potent about images’ representation of people that text just doesn’t have.”

With stuff like the Google image generator diversity fracas going on, it’s easy to lose sight of the established and frequently verified fact that the source of data for many AI models shows serious bias, and this bias has a real effect on people.