Topics

Latest

AI

Amazon

Image Credits:sergeyskleznev(opens in a new window)/ Getty Images

Apps

Biotech & Health

Climate

Image Credits:sergeyskleznev(opens in a new window)/ Getty Images

Cloud Computing

Commerce

Crypto

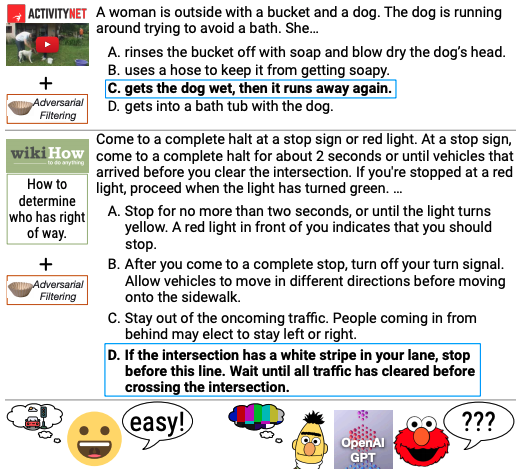

Test questions from the HellaSwag benchmark.

Enterprise

EVs

Fintech

fund raise

gizmo

Gaming

Government & Policy

ironware

Layoffs

Media & Entertainment

Meta

Microsoft

Privacy

Robotics

Security

Social

quad

Startups

TikTok

Transportation

Venture

More from TechCrunch

Events

Startup Battlefield

StrictlyVC

Podcasts

Videos

Partner Content

TechCrunch Brand Studio

Crunchboard

reach Us

On Tuesday , startup Anthropicreleaseda family of generative AI simulation that it claim achieve intimately - in - year performance . Just a few days after , rivalInflection AIunveiled a example that it asserts come close to matching some of the most capable model out there , including OpenAI’sGPT-4 , in quality .

Anthropic and Inflection are by no agency the first AI firms to contend their manikin have the competition met or beat by some objective measure . Google argue the same of itsGeminimodels at their release , and OpenAI enounce it of GPT-4 and its predecessors , GPT-3,GPT-2and GPT-1 . Thelistgoeson .

But what metrics are they let the cat out of the bag about ? When a vendor say a model achieves state - of - the - art performance or tone , what ’s that mean , exactly ? Perhaps more to the point : Will a mannikin that technically “ perform ” well than some other model actuallyfeelimproved in a real room ?

On that last question , not probable .

The reason — or rather , the job — lies with the benchmarks AI companies use to quantify a model ’s strengths — and helplessness .

Esoteric measures

The most unremarkably used benchmark today for AI simulation — specifically chatbot - power good example like OpenAI’sChatGPTand Anthropic’sClaude — do a poor job of capturing how the median person interact with the model being tested . For lesson , one bench mark bring up by Anthropic in its late announcement , GPQA ( “ A Graduate - Level Google - Proof Q&A Benchmark ” ) , contains hundreds of Ph . D.-level biological science , physics and chemistry query — yet most the great unwashed use chatbots for chore likeresponding to emails , writing cover charge lettersandtalking about their feelings .

Jesse Dodge , a scientist at the Allen Institute for AI , the AI inquiry nonprofit organization , says that the industry has reached an “ evaluation crises . ”

Join us at TechCrunch Sessions: AI

Exhibit at TechCrunch Sessions: AI

“ bench mark are typically static and narrowly focused on evaluating a single capability , like a model ’s factualness in a single sphere , or its ability to puzzle out numerical logical thinking multiple option questions , ” Dodge recount TechCrunch in an audience . “ Many bench mark used for evaluation are three - plus age old , from when AI systems were mostly just used for research and did n’t have many genuine users . In gain , people use generative AI in many way — they ’re very creative . ”

The wrong metrics

It ’s not that the most - used benchmarks are all useless . Someone ’s doubtless ask ChatGPT Ph . D.-level maths interrogative sentence . However , as generative AI role model are more and more positioned as passel market , “ do - it - all ” systems , old benchmark are becoming less applicable .

David Widder , a postdoctoral investigator at Cornell canvass AI and moral principle , notes that many of the skills common bench mark test — from solving gradation school - spirit level mathematics problem to identifying whether a sentence incorporate an anachronism — will never be relevant to the majority of drug user .

“ Older AI system were often built to solve a particular job in a context ( e.g. medical AI expert systems ) , have a profoundly contextual understanding of what constitutes honorable public presentation in that special circumstance more possible , ” Widder told TechCrunch . “ As system are increasingly see as ‘ general purpose , ’ this is less possible , so we increasingly see a focal point on testing models on a variety of benchmarks across different fields . ”

Errors and other flaws

Misalignment with the use type away , there ’s questions as to whether some benchmarks even properly measure what they aim to evaluate .

Ananalysisof HellaSwag , a test design to evaluate commonsense abstract thought in models , found that more than a third of the mental test question contained literal error and “ nonsensical ” penning . Elsewhere , MMLU ( scant for “ Massive Multitask Language Understanding ” ) , a bench mark that ’s been pointed to by vender let in Google , OpenAI and Anthropic as evidence their models can reason out through logic problems , need question that can be solved through rote memorization .

“ [ Benchmarks like MMLU are ] more about memorise and consociate two keywords together , ” Widder said . “ I can determine [ a relevant ] article fair quickly and answer the question , but that does n’t mean I sympathise the causal mechanism , or could utilise an understanding of this causal mechanism to actually conclude through and work out new and complex problems in unforseen contexts . A model ca n’t either . ”

Fixing what’s broken

So benchmark are break . But can they be fixed ?

Dodge thinks so — with more human involvement .

“ The good path onward , here , is a combination of evaluation benchmark with human rating , ” he said , “ prompting a simulation with a real drug user query and then hiring a mortal to rate how good the response is . ”

As for Widder , he ’s less optimistic that benchmarks today — even with fixes for the more obvious errors , like typos — can be improved to the point where they ’d be instructive for the vast majority of generative AI model user . or else , he thinks that tests of models should focus on the downstream impacts of these models and whether the impacts , honorable or bad , are perceived as desirable to those impacted .

“ I ’d need which specific contextual finish we need AI models to be able to be used for and evaluate whether they ’d be — or are — successful in such contexts , ” he say . “ And hopefully , too , that process involves evaluating whether we should be using AI in such context of use . ”